Traffic Analysis Using Debian Lenny

While using my Network Monitoring Appliance, we noticed in MRTG that one link was always under heavy load. Many different kinds of traffic aggregate on this link, so we decided to analyze which protocols, and therefore which applications, make up the cumulative traffic, and in what proportions.

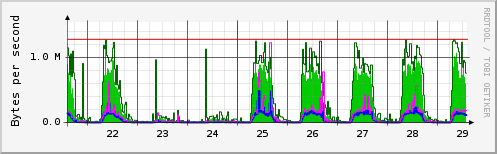

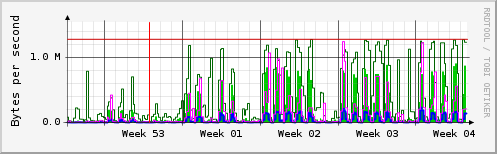

As you can easily see, there is a lot of traffic on every day except weekends and holidays.

One open-source application that can do this is ntop. Excerpt from www.ntop.org:

"ntop is a network traffic probe that shows the network usage, similar to what the popular top Unix command does. ntop is based on libpcap and it has been written in a portable way in order to virtually run on every Unix platform and on Win32 as well. ntop users can use a a web browser (e.g. netscape) to navigate through ntop (that acts as a web server) traffic information and get a dump of the network status. In the latter case, ntop can be seen as a simple RMON-like agent with an embedded web interface."

Preliminary and Disclaimer

Please be aware that the law in some countries may forbid the kind of activities described in this article; make sure that you do not break any laws. Also be aware that the following recipe describes the way we implemented our solution; I give no guarantee that it works for you.

1. The Link

The link has a wire speed of 10 Mbit/s and is a routing gateway between two sites located a few kilometers apart. A short calculation of how much data travels over this link suggested that it should be in the range of ~25 gigabytes per day. There are approximately 2000 systems on one side of the link and about 200 on the other, with about a dozen communication relations between the two sides that are of interest to us. Later we noticed that about 40-50 million packets travel over this link every day between 7am and 5pm.
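
As a rough cross-check of such an estimate, the upper bound for the link can be worked out with shell arithmetic. This is only a sketch: the function names are made up for this example, and it assumes a fully loaded link without any protocol overhead.

```shell
#!/bin/sh
# Hypothetical helpers, not part of the original setup.

# Maximum number of bytes a link can carry in a given time.
# $1 = link speed in Mbit/s, $2 = duration in seconds
link_capacity_bytes() {
    echo $(( $1 * 1000000 / 8 * $2 ))
}

# Average utilisation in percent (integer, rounded down).
# $1 = bytes actually transferred, $2 = capacity in bytes
utilisation_percent() {
    echo $(( $1 * 100 / $2 ))
}

# 10 Mbit/s over the 10 hours from 7am to 5pm
capacity=$(link_capacity_bytes 10 36000)
echo "Capacity 7am-5pm: $capacity bytes"

# Assumption: the estimated 25 GB per day all fall into that window.
echo "Utilisation: $(utilisation_percent 25000000000 "$capacity")%"
```

For a 10 Mbit/s link and a ten-hour window the upper bound is 45 GB, so ~25 GB would correspond to an average utilisation of roughly 55% under these assumptions.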

Because ntop analyzes all traffic and shows communication relations (for example as top talkers), we assumed that it would need a lot of RAM to build its tables of all communication relations, so the probe should have as much RAM as possible.

2. The Probe

We decided to take an old, unused box, do a minimal install of Debian Lenny, and use it as the ntop probe. We only did a minimal install because we did not want to waste precious RAM on X11 or other applications that are useless for this use case. We chose Debian because it is easily adaptable to our needs and is known for its stability. You can build a probe like the one described here with any other Linux distribution you are familiar with; the *BSDs may also be a good foundation.

We decided to place this probe near the router which handles this link, and to configure a mirror-port to get access to the whole traffic running on this link.

We equipped the probe with a second NIC, because we need one NIC for capturing traffic and wanted to be able to administer the probe remotely over SSH. The first NIC (eth0) was configured without an IP address, because it is only used to capture the traffic from the mirror port of the router and not for any active communication. Furthermore, eth0 has to be brought into promiscuous mode (to see all traffic), which is done by libpcap. The second NIC (eth1), which is used only for remote administration, was configured with a static IP address.
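
Whether libpcap really switched eth0 into promiscuous mode can be checked while a capture is running. The sketch below assumes a Linux kernel that exposes the interface flags in /sys/class/net/<interface>/flags; the function names are made up for this example. IFF_PROMISC is the 0x100 bit of the interface flags on Linux.

```shell
#!/bin/sh
# Hypothetical helpers, not part of the original setup.

# Succeeds if the promiscuous bit (IFF_PROMISC, 0x100 on Linux) is set
# in the given hexadecimal flags value, e.g. 0x1103.
flags_promiscuous() {
    [ $(( $1 & 0x100 )) -ne 0 ]
}

# Report the promiscuous state of an interface (default: eth0).
# Assumes that sysfs is mounted on /sys.
check_promiscuous() {
    iface=${1:-eth0}
    flags_file=/sys/class/net/$iface/flags
    if [ ! -r "$flags_file" ]; then
        echo "$iface: cannot read $flags_file" >&2
        return 2
    fi
    if flags_promiscuous "$(cat "$flags_file")"; then
        echo "$iface: promiscuous mode is on"
    else
        echo "$iface: promiscuous mode is off"
        return 1
    fi
}
```

Calling `check_promiscuous eth0` while tcpdump or ntop is capturing should then report that promiscuous mode is on.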

Fortunately, the old box used as the probe was equipped with 2GB RAM (which was enough), an AMD Athlon(tm) 64 Processor 3800+, and a local 80GB disk. The disk was partitioned this way:

    # fdisk -l

    Disk /dev/sda: 80.0 GB, 80026361856 bytes
    255 heads, 63 sectors/track, 9729 cylinders
    Units = cylinders of 16065 * 512 = 8225280 bytes
    Disk identifier: 0x37aa37aa

       Device Boot      Start         End      Blocks   Id  System
    /dev/sda1               1         131     1052226   83  Linux
    /dev/sda2             132         162      249007+  82  Linux swap / Solaris
    /dev/sda3             163        9729    76846927+   5  Extended
    /dev/sda5             163        9729    76846896   83  Linux

/dev/sda1 is a 1GB partition for Lenny, /dev/sda2 is a small swap partition, and /dev/sda5 is used for capture files. The filesystems are ext3, but XFS may also be a solid candidate for such large files.

3. Offline Reporting

ntop can analyse traffic on the fly, or it can read pcap files for later analysis and reporting.

We decided to start with the offline approach: capture the traffic on the link with tcpdump from 7am to 5pm using a short shell script driven by cron, and do the analysis in a second step.
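
A minimal sketch of the cron side, assuming the capture script is installed as /usr/local/sbin/capture (a made-up path) and that the system reads job files from /etc/cron.d, as Debian's cron does. The function name is made up as well, and it takes the target file as an argument so that it can be tried on a scratch file first.

```shell
#!/bin/sh
# Hypothetical installer for the two cron jobs; all paths are assumptions.

# Write a cron.d-style job file that starts the capture at 07:00
# and stops it at 17:00 every day.
# $1 = file to write, $2 = path of the capture script
write_capture_cron() {
    cat > "$1" <<EOF
# m h dom mon dow user command
0 7 * * * root $2 start
0 17 * * * root $2 stop
EOF
}

# Try it on a scratch file first; copy the result to
# /etc/cron.d/capture as root once it looks right.
scratch=$(mktemp)
write_capture_cron "$scratch" /usr/local/sbin/capture
cat "$scratch"
rm -f "$scratch"
```

Changing the day-of-week field from `*` to `1-5` would restrict the capture to Monday through Friday.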

The shell script looks like a SysV init script:

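    #!/bin/sh
    # Start or stop a tcpdump capture on eth0; meant to be called from cron.

    PATH=/sbin:/usr/sbin:/bin:/usr/bin

    do_start() {
        # Bring up the capture interface (it has no IP address).
        ifconfig eth0 up
        # Write all traffic to a file named after the start date and time.
        tcpdump -i eth0 -w "/media/capture/$(date +%F_%R)_tcpdump.pcap" &
    }

    do_stop() {
        # Ask tcpdump to terminate so that it closes the capture file cleanly.
        pkill -TERM tcpdump
        ifconfig eth0 down
    }

    case "$1" in
        start)
            do_start 2>&1
            ;;
        stop)
            do_stop
            ;;
        *)
            echo "Usage: $0 start|stop" >&2
            exit 3
            ;;
    esac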

Capturing traffic from 7am to 5pm produces files of ~30GB:

    -rw-r--r-- 1 root root 32725662515 Jan 14 17:00 2010-01-14_07:00_tcpdump.pcap
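
With files of this size it is worth checking how many captures still fit on the capture partition before the next run. A rough sketch, assuming the mount point /media/capture from the script and the per-capture size from the listing; the function names are made up for this example.

```shell
#!/bin/sh
# Hypothetical helpers, not part of the original setup.

# Number of whole captures that fit into the given free space.
# $1 = free space in KiB, $2 = expected size of one capture in bytes
captures_that_fit() {
    echo $(( $1 * 1024 / $2 ))
}

# Free space in KiB on the filesystem holding the given directory,
# taken from the "Available" column of POSIX df output.
free_kib() {
    df -P -k "$1" | awk 'NR == 2 { print $4 }'
}

capture_dir=/media/capture      # mount point used by the capture script
capture_bytes=32725662515       # size of the capture shown in the listing

if [ -d "$capture_dir" ]; then
    echo "Captures that still fit: $(captures_that_fit "$(free_kib "$capture_dir")" "$capture_bytes")"
fi
```

An empty partition of the size shown by fdisk for /dev/sda5 (76846896 blocks of 1 KiB) has room for two such captures, before filesystem overhead is taken into account, so old capture files have to be removed regularly.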

A capture file is read with:

    ntop -m 10.80.192.0/18,10.81.20.0/24 -f /media/capture/2010-01-13_10\:30_tcpdump.pcap -n -4 -w3000 --w3c -p /etc/ntop/protocol.list

For a detailed explanation of ntop's command-line switches and parameters, please have a look at its man page, and adjust them to your needs.

You can then examine ntop's report in a web browser on port 3000 of the system where ntop is running.

4. Online Reporting

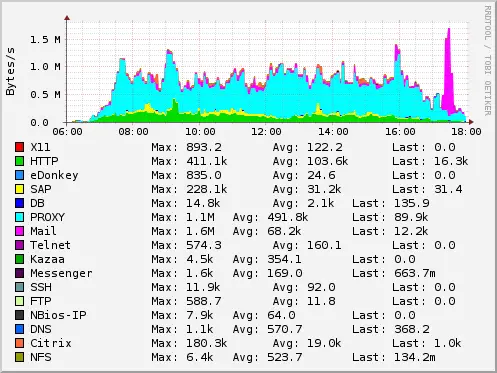

Another way to use ntop is to let ntop itself capture the traffic on eth0 and do the reporting on the fly, so that you can see what is going on a few seconds later and (with the rrd plugin activated) get really nice, management-friendly graphs that you can zoom into with your mouse.

5. First Conclusions

As expected, we saw that approximately 70% of all traffic was caused by one system, the Internet proxy. Traffic associated with production applications was in the range of 3-5%. To make matters worse, this production traffic belongs to interactive applications such as Citrix, Telnet and SAP. We decided that the next step should be to prioritize or shape the traffic with the help of (for instance) DiffServ or TOS mangling.

6. ngrep

We then decided to analyze the proxy-related traffic to find out which websites were visited most. Such a deeper analysis is not possible with ntop, but with ngrep it is easy to capture only the traffic that (for instance) goes to one system or comes from or goes to one port, and much more. ngrep is also capable of searching for expressions in the payload, so the next approach was to use ngrep to capture only the traffic related to the Internet proxy and analyse it further.

This is simply done with:

    ngrep -d eth0 host 10.89.1.17 -O /media/capture/snap.pcap

Needless to say, this simple kind of capturing could also be done with tcpdump.

Run from 7am to 5pm, this can produce a file of ~14GB:

    -rw-r--r-- 1 root root 14223354675 Jan 25 16:26 snap.pcap

Now this file must be analyzed. The idea is to:

* read the file with tcpdump and dump it to stdout,

* grep for "get http://",

* cut out the FQDN of the sites,

* throw away duplicate entries that appear in short series (because they may belong to one click in the browser resulting in a run of consecutive GETs),

* and count and sort this data according to the number of occurrences.

This could be done in several steps using intermediate files, or in one command in which several command-line tools are piped together:

    tcpdump -r snap.pcap -A | grep -i "get http://" | awk '/http/ { print $2 }' | cut -d/ -f1-3 | grep http | sed '$!N; /^\(.*\)\n\1$/!P; D' | sort | uniq -c | sort -rn > urls.txt

This runs for a while and produces a file sorted in descending order by how often the sites found in the capture file were visited. I'm not 100% sure whether all the assumptions made above are handled correctly, but the numbers in the file look plausible.

    13418 http://www.gxxxxx.dx
    10184 http://www.gxxxxx-axxxxxxxx.cxx
     8281 http://www.fxxxxxxx.dx
     5470 http://www.bxxx.dx
     4269 http://www.sxxxxxx.dx
     2550 http://www.gxxx.cxx
     2047 http://www.bxxxxxxx-zxxxxxx.dx
     2044 http://www.fxxxxxxx.cxx
     1618 http://www.exxxxxxx.dx
     1410 http://www.lx-bx.dx
     ....
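
To see what the individual stages do, the same chain can be run on a handful of hand-made input lines instead of a real capture. The sample lines below are invented and only imitate the shape of tcpdump -A output, and the function name count_sites is made up for this example. There are two deliberate differences from the one-liner: uniq stands in for the sed expression (both drop consecutive duplicate lines), and a final awk strips the padding that uniq -c adds.

```shell
#!/bin/sh
# Hypothetical helper: reads tcpdump -A style text on stdin and prints
# "count site" lines, most frequent site first.
count_sites() {
    grep -i "get http://" |        # keep only proxy-style GET requests
    awk '/http/ { print $2 }' |    # second field is the requested URL
    cut -d/ -f1-3 |                # reduce the URL to http://host
    grep http |
    uniq |                         # collapse runs of the same site
    sort | uniq -c |               # count how often each site remains
    sort -rn |                     # highest count first
    awk '{ print $1, $2 }'         # drop the padding added by uniq -c
}

# Invented sample input; the leading dots imitate unprintable header bytes.
count_sites <<'EOF'
....GET http://www.example.com/index.html HTTP/1.1
....GET http://www.example.com/style.css HTTP/1.1
....GET http://www.example.org/ HTTP/1.1
....GET http://www.example.com/news HTTP/1.1
Host: www.example.com
....GET http://www.example.net/a HTTP/1.1
....GET http://www.example.org/x HTTP/1.1
....GET http://www.example.com/b HTTP/1.1
EOF
```

Here the first two requests count as one visit because they follow each other directly, so www.example.com ends up with a count of 3, www.example.org with 2 and www.example.net with 1.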

Another way to get a report about visited websites would be to evaluate the log file of the Internet proxy, maybe with Calamaris (in the case of Squid or a proxy that produces logs Calamaris can process). Without a proxy log, I am not aware of any other way to produce such a report.
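
For Squid, a quick first look is possible even without Calamaris. The sketch below assumes Squid's native access.log format, in which the sixth whitespace-separated field is the request method and the seventh is the requested URL; the function name and the sample log line are made up for this example. Only the site part of the URL is kept, and the client address field is never printed.

```shell
#!/bin/sh
# Hypothetical helper: reads a Squid access.log in native format on
# stdin and prints "count site" lines, most frequent site first.
top_sites_from_squid_log() {
    awk '$6 == "GET" && $7 ~ /^http:\/\// { print $7 }' |  # URL of GET requests
    cut -d/ -f1-3 |                                        # reduce to http://host
    sort | uniq -c | sort -rn |
    awk '{ print $1, $2 }'                                 # drop uniq -c padding
}

# Invented sample line in native format:
# time elapsed client code/status bytes method URL ident peer type
top_sites_from_squid_log <<'EOF'
1264410000.123     45 192.0.2.5 TCP_MISS/200 1234 GET http://www.example.com/index.html - DIRECT/192.0.2.80 text/html
EOF
```

Unlike the capture-based pipeline, this counts every logged GET request and does not collapse runs of requests to the same site, so the absolute numbers are not directly comparable.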

Last but not least, we would like to strongly emphasize that no user-related information was evaluated in this project.