A Beginner's Guide To LVM - Page 6

6 Return To The System's Original State

In this chapter we will undo all changes from the previous chapters to return to the system's original state. This is just for training purposes so that you learn how to undo an LVM setup.

First we must unmount our logical volumes:

umount /var/share

umount /var/backup

umount /var/media

df -h

server1:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 19G 665M 17G 4% /

tmpfs 78M 0 78M 0% /lib/init/rw

udev 10M 92K 10M 1% /dev

tmpfs 78M 0 78M 0% /dev/shm

/dev/sda1 137M 17M 114M 13% /boot
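Instead of reading the df listing by eye, the same check can be scripted. The following is only a minimal sketch, assuming a Linux system whose mount table is readable at /proc/mounts; the helper name is_mounted and its optional second argument are made up for this illustration.

```shell
# is_mounted DIR [MOUNTS_FILE]
# Succeeds (exit status 0) if DIR appears as a mount point in the mount
# table. MOUNTS_FILE defaults to /proc/mounts; the parameter exists only
# so the function can be tried against a saved copy of that file.
# (is_mounted is a hypothetical helper, not part of LVM or util-linux.)
is_mounted() {
    awk -v dir="$1" '$2 == dir { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}

# Report the state of the three mount points used in this guide.
for dir in /var/share /var/backup /var/media; do
    if is_mounted "$dir"; then
        echo "$dir is still mounted"
    else
        echo "$dir is not mounted"
    fi
done
```

If any of the three directories is reported as still mounted, unmount it before you continue with lvremove.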

Then we delete each of them:

lvremove /dev/fileserver/share

server1:~# lvremove /dev/fileserver/share

Do you really want to remove active logical volume "share"? [y/n]: <-- y

Logical volume "share" successfully removed

lvremove /dev/fileserver/backup

server1:~# lvremove /dev/fileserver/backup

Do you really want to remove active logical volume "backup"? [y/n]: <-- y

Logical volume "backup" successfully removed

lvremove /dev/fileserver/media

server1:~# lvremove /dev/fileserver/media

Do you really want to remove active logical volume "media"? [y/n]: <-- y

Logical volume "media" successfully removed

Next we remove the volume group fileserver:

vgremove fileserver

server1:~# vgremove fileserver

Volume group "fileserver" successfully removed

Finally we wipe the LVM labels from the physical volumes:

pvremove /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1

server1:~# pvremove /dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1

Labels on physical volume "/dev/sdc1" successfully wiped

Labels on physical volume "/dev/sdd1" successfully wiped

Labels on physical volume "/dev/sde1" successfully wiped

Labels on physical volume "/dev/sdf1" successfully wiped

vgdisplay

server1:~# vgdisplay

No volume groups found

pvdisplay

should display nothing at all:

server1:~# pvdisplay
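The order of the steps above matters: logical volumes go first, then the volume group, then the physical volume labels. As a rough sketch of that order, the script below prints the commands instead of running them. The helpers run and teardown_lvm and the DRY_RUN variable are assumptions made for this example, not LVM features.

```shell
# Print a command (DRY_RUN=1, the default here) or execute it (DRY_RUN=0).
run() {
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# teardown_lvm VG "LV1 LV2 ..." "PV1 PV2 ..."
# Removes the pieces in dependency order: logical volumes, then the
# volume group, then the physical volume labels.
teardown_lvm() {
    vg=$1
    for lv in $2; do
        run lvremove "/dev/$vg/$lv"
    done
    run vgremove "$vg"
    # $3 is deliberately unquoted so that each PV becomes its own argument.
    run pvremove $3
}

teardown_lvm fileserver "share backup media" \
    "/dev/sdc1 /dev/sdd1 /dev/sde1 /dev/sdf1"
```

With DRY_RUN=0 the commands are really executed, and lvremove still asks for confirmation as shown in the transcripts above.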

Now we must undo our changes in /etc/fstab so that the system does not try to mount devices that no longer exist. Fortunately we made a backup of the original file, which we can now restore:

mv /etc/fstab_orig /etc/fstab
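Note that mv leaves no backup behind. If you would rather keep /etc/fstab_orig, a copy followed by a comparison does the same job. This is just a sketch; restore_file is a hypothetical helper name.

```shell
# restore_file BACKUP TARGET
# Copies BACKUP over TARGET (preserving mode and timestamps) and then
# verifies that both files are byte-identical. Unlike mv, the backup
# stays in place. (restore_file is a hypothetical helper name.)
restore_file() {
    if [ ! -f "$1" ]; then
        echo "backup $1 not found" >&2
        return 1
    fi
    cp -p "$1" "$2" && cmp -s "$1" "$2"
}

# Intended use on the tutorial system (run as root):
#   restore_file /etc/fstab_orig /etc/fstab
```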

Reboot the system:

shutdown -r now

Afterwards the output of

df -h

should look like this:

server1:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 19G 666M 17G 4% /

tmpfs 78M 0 78M 0% /lib/init/rw

udev 10M 92K 10M 1% /dev

tmpfs 78M 0 78M 0% /dev/shm

/dev/sda1 137M 17M 114M 13% /boot

The system is now as it was in the beginning, with two exceptions that we leave in place: the partitions /dev/sdb1 - /dev/sdf1 still exist (you could delete them with fdisk), and so do the directories /var/share, /var/backup, and /var/media.

7 LVM On RAID1

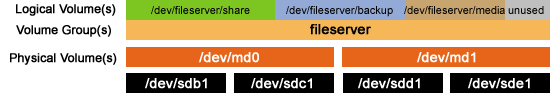

In this chapter we will set up LVM again and move it to a RAID1 array to guarantee for high-availability. In the end this should look like this:

This means we will make the RAID array /dev/md0 from the partitions /dev/sdb1 + /dev/sdc1, and the RAID array /dev/md1 from the partitions /dev/sdd1 + /dev/sde1. /dev/md0 and /dev/md1 will then be the physical volumes for LVM.

Before we come to that, we set up LVM as before:

pvcreate /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

vgcreate fileserver /dev/sdb1 /dev/sdc1 /dev/sdd1 /dev/sde1

lvcreate --name share --size 40G fileserver

lvcreate --name backup --size 5G fileserver

lvcreate --name media --size 1G fileserver

mkfs.ext3 /dev/fileserver/share

mkfs.xfs /dev/fileserver/backup

mkfs.reiserfs /dev/fileserver/media

Then we mount our logical volumes:

mount /dev/fileserver/share /var/share

mount /dev/fileserver/backup /var/backup

mount /dev/fileserver/media /var/media

The output of

df -h

should now look like this:

server1:~# df -h

Filesystem Size Used Avail Use% Mounted on

/dev/sda2 19G 666M 17G 4% /

tmpfs 78M 0 78M 0% /lib/init/rw

udev 10M 92K 10M 1% /dev

tmpfs 78M 0 78M 0% /dev/shm

/dev/sda1 137M 17M 114M 13% /boot

/dev/mapper/fileserver-share

40G 177M 38G 1% /var/share

/dev/mapper/fileserver-backup

5.0G 144K 5.0G 1% /var/backup

/dev/mapper/fileserver-media

1.0G 33M 992M 4% /var/media

Now we must move the contents of /dev/sdc1 and /dev/sde1 to the remaining partitions (/dev/sdc1 is the second partition of our future /dev/md0, /dev/sde1 the second partition of our future /dev/md1). Afterwards we will remove them from LVM, change their partition type to fd (Linux RAID autodetect), and add them to /dev/md0 and /dev/md1 respectively.

modprobe dm-mirror

pvmove /dev/sdc1

vgreduce fileserver /dev/sdc1

pvremove /dev/sdc1

pvdisplay

server1:~# pvdisplay

--- Physical volume ---

PV Name /dev/sdb1

VG Name fileserver

PV Size 23.29 GB / not usable 0

Allocatable yes (but full)

PE Size (KByte) 4096

Total PE 5961

Free PE 0

Allocated PE 5961

PV UUID USDJyG-VDM2-r406-OjQo-h3eb-c9Mp-4nvnvu

--- Physical volume ---

PV Name /dev/sdd1

VG Name fileserver

PV Size 23.29 GB / not usable 0

Allocatable yes

PE Size (KByte) 4096

Total PE 5961

Free PE 4681

Allocated PE 1280

PV UUID qdEB5d-389d-O5UA-Kbwv-mn1y-74FY-4zublN

--- Physical volume ---

PV Name /dev/sde1

VG Name fileserver

PV Size 23.29 GB / not usable 0

Allocatable yes

PE Size (KByte) 4096

Total PE 5961

Free PE 1426

Allocated PE 4535

PV UUID 4vL1e0-sr2M-awGd-qDJm-ZrC9-wuxW-2lEqp2
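The listing above also shows whether the next pvmove can succeed. /dev/sdb1 is full, so all 4535 allocated extents of /dev/sde1 have to fit into the 4681 free extents of /dev/sdd1. The arithmetic, with the numbers copied by hand from the pvdisplay output (the variable names are chosen for this example only), looks like this:

```shell
pe_size_kb=4096      # "PE Size (KByte)" from pvdisplay
total_pe=5961        # "Total PE" of each partition
sde1_allocated=4535  # "Allocated PE" on /dev/sde1
sdd1_free=4681       # "Free PE" on /dev/sdd1

# Size of one physical volume in MiB: number of extents * extent size.
pv_mib=$(( total_pe * pe_size_kb / 1024 ))
echo "PV size: $pv_mib MiB"

# Extents left free on /dev/sdd1 once /dev/sde1 has been emptied onto it.
left=$(( sdd1_free - sde1_allocated ))
if [ "$left" -ge 0 ]; then
    echo "pvmove fits, $left extents remain free on /dev/sdd1"
else
    echo "pvmove would not fit, short by $(( -left )) extents"
fi
```

The 23844 MiB correspond to the 23.29 GB shown as PV Size, and the move should leave 146 extents free on /dev/sdd1.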

pvmove /dev/sde1

vgreduce fileserver /dev/sde1

pvremove /dev/sde1

pvdisplay

server1:~# pvdisplay

--- Physical volume ---

PV Name /dev/sdb1

VG Name fileserver

PV Size 23.29 GB / not usable 0

Allocatable yes (but full)

PE Size (KByte) 4096

Total PE 5961

Free PE 0

Allocated PE 5961

PV UUID USDJyG-VDM2-r406-OjQo-h3eb-c9Mp-4nvnvu

--- Physical volume ---

PV Name /dev/sdd1

VG Name fileserver

PV Size 23.29 GB / not usable 0

Allocatable yes

PE Size (KByte) 4096

Total PE 5961

Free PE 146

Allocated PE 5815

PV UUID qdEB5d-389d-O5UA-Kbwv-mn1y-74FY-4zublN
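Both partitions were taken out of the volume group with the same three commands. As a small sketch, the function below prints that sequence for any physical volume; evacuate_pv is a made-up name and the function only prints text, it does not touch LVM.

```shell
# evacuate_pv VG PV
# Prints the three commands that take PV out of VG, in the order used
# above: move the extents away, drop the PV from the volume group, wipe
# the LVM label. (evacuate_pv is a hypothetical helper.)
evacuate_pv() {
    printf 'pvmove %s\n' "$2"
    printf 'vgreduce %s %s\n' "$1" "$2"
    printf 'pvremove %s\n' "$2"
}

for pv in /dev/sdc1 /dev/sde1; do
    evacuate_pv fileserver "$pv"
done
```

To execute the printed commands as root you could pipe them into sh -e, which stops at the first command that fails, so that vgreduce and pvremove never run after an unsuccessful pvmove.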

Now we change the partition type of /dev/sdc1 to fd (Linux RAID autodetect):

fdisk /dev/sdc

server1:~# fdisk /dev/sdc

The number of cylinders for this disk is set to 10443.

There is nothing wrong with that, but this is larger than 1024,

and could in certain setups cause problems with:

1) software that runs at boot time (e.g., old versions of LILO)

2) booting and partitioning software from other OSs

(e.g., DOS FDISK, OS/2 FDISK)

Command (m for help): <-- m

Command action

a toggle a bootable flag

b edit bsd disklabel

c toggle the dos compatibility flag

d delete a partition

l list known partition types

m print this menu

n add a new partition

o create a new empty DOS partition table

p print the partition table

q quit without saving changes

s create a new empty Sun disklabel

t change a partition's system id

u change display/entry units

v verify the partition table

w write table to disk and exit

x extra functionality (experts only)

Command (m for help): <-- t

Selected partition 1

Hex code (type L to list codes): <-- L

0 Empty 1e Hidden W95 FAT1 80 Old Minix be Solaris boot

1 FAT12 24 NEC DOS 81 Minix / old Lin bf Solaris

2 XENIX root 39 Plan 9 82 Linux swap / So c1 DRDOS/sec (FAT-

3 XENIX usr 3c PartitionMagic 83 Linux c4 DRDOS/sec (FAT-

4 FAT16 <32M 40 Venix 80286 84 OS/2 hidden C: c6 DRDOS/sec (FAT-

5 Extended 41 PPC PReP Boot 85 Linux extended c7 Syrinx

6 FAT16 42 SFS 86 NTFS volume set da Non-FS data

7 HPFS/NTFS 4d QNX4.x 87 NTFS volume set db CP/M / CTOS / .

8 AIX 4e QNX4.x 2nd part 88 Linux plaintext de Dell Utility

9 AIX bootable 4f QNX4.x 3rd part 8e Linux LVM df BootIt

a OS/2 Boot Manag 50 OnTrack DM 93 Amoeba e1 DOS access

b W95 FAT32 51 OnTrack DM6 Aux 94 Amoeba BBT e3 DOS R/O

c W95 FAT32 (LBA) 52 CP/M 9f BSD/OS e4 SpeedStor

e W95 FAT16 (LBA) 53 OnTrack DM6 Aux a0 IBM Thinkpad hi eb BeOS fs

f W95 Ext'd (LBA) 54 OnTrackDM6 a5 FreeBSD ee EFI GPT

10 OPUS 55 EZ-Drive a6 OpenBSD ef EFI (FAT-12/16/

11 Hidden FAT12 56 Golden Bow a7 NeXTSTEP f0 Linux/PA-RISC b

12 Compaq diagnost 5c Priam Edisk a8 Darwin UFS f1 SpeedStor

14 Hidden FAT16 <3 61 SpeedStor a9 NetBSD f4 SpeedStor

16 Hidden FAT16 63 GNU HURD or Sys ab Darwin boot f2 DOS secondary

17 Hidden HPFS/NTF 64 Novell Netware b7 BSDI fs fd Linux raid auto

18 AST SmartSleep 65 Novell Netware b8 BSDI swap fe LANstep

1b Hidden W95 FAT3 70 DiskSecure Mult bb Boot Wizard hid ff BBT

1c Hidden W95 FAT3 75 PC/IX

Hex code (type L to list codes): <-- fd

Changed system type of partition 1 to fd (Linux raid autodetect)

Command (m for help): <-- w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

Now do the same with /dev/sde1:

fdisk /dev/sde
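If you do not want to type the answers again, the keystrokes from the session above (t, fd, w) can be fed to fdisk on standard input. This is only a sketch: the function name is hypothetical, and it assumes a disk with a single partition, so that fdisk selects partition 1 by itself as it did above. Prompts can differ between fdisk versions, so check the result with fdisk -l afterwards.

```shell
# fdisk_set_type_keys HEXCODE
# Prints the answers given to fdisk in the session above: "t" (change a
# partition's system id), the hex code, and "w" (write table and exit).
# (fdisk_set_type_keys is a hypothetical helper name.)
fdisk_set_type_keys() {
    printf 't\n%s\nw\n' "$1"
}

# Show the keystrokes only; nothing is written to any disk here.
fdisk_set_type_keys fd

# To apply them for real (as root):
#   fdisk_set_type_keys fd | fdisk /dev/sde
```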

The output of

fdisk -l

should now look like this:

server1:~# fdisk -l

Disk /dev/sda: 21.4 GB, 21474836480 bytes

255 heads, 63 sectors/track, 2610 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sda1 * 1 18 144553+ 83 Linux

/dev/sda2 19 2450 19535040 83 Linux

/dev/sda4 2451 2610 1285200 82 Linux swap / Solaris

Disk /dev/sdb: 85.8 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdb1 1 3040 24418768+ 8e Linux LVM

Disk /dev/sdc: 85.8 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdc1 1 3040 24418768+ fd Linux raid autodetect

Disk /dev/sdd: 85.8 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdd1 1 3040 24418768+ 8e Linux LVM

Disk /dev/sde: 85.8 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sde1 1 3040 24418768+ fd Linux raid autodetect

Disk /dev/sdf: 85.8 GB, 85899345920 bytes

255 heads, 63 sectors/track, 10443 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Device Boot Start End Blocks Id System

/dev/sdf1 1 3040 24418768+ 8e Linux LVM
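To pick out just the partitions of a given type from such a listing, a few lines of awk are enough. The sketch below assumes the column layout shown above (Device, Boot, Start, End, Blocks, Id, System); newer fdisk versions print different columns, so treat partitions_with_id as a hypothetical helper tied to this output format.

```shell
# partitions_with_id ID
# Reads "fdisk -l" output on stdin and prints every partition whose Id
# column equals ID. A "*" in the Boot column shifts the columns by one.
partitions_with_id() {
    awk -v id="$1" '
        /^\/dev\// {
            col = ($2 == "*") ? 6 : 5
            if ($col == id) print $1
        }'
}

# Intended use (as root):
#   fdisk -l | partitions_with_id fd
```

For the listing above this should print /dev/sdc1 and /dev/sde1.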

Next we add /dev/sdc1 to /dev/md0 and /dev/sde1 to /dev/md1. Because the other members (/dev/sdb1 and /dev/sdd1) are still in use by LVM and cannot be added yet, we must specify missing in their place in the following commands:

mdadm --create /dev/md0 --auto=yes -l 1 -n 2 /dev/sdc1 missing

server1:~# mdadm --create /dev/md0 --auto=yes -l 1 -n 2 /dev/sdc1 missing

mdadm: array /dev/md0 started.

mdadm --create /dev/md1 --auto=yes -l 1 -n 2 /dev/sde1 missing

server1:~# mdadm --create /dev/md1 --auto=yes -l 1 -n 2 /dev/sde1 missing

mdadm: array /dev/md1 started.
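Arrays created with missing run in degraded mode until the second member is added. The kernel reports this in /proc/mdstat, where each array has a status field such as [UU] (both members up) or [U_] (one slot empty). The following sketch lists the degraded arrays; degraded_arrays is a hypothetical helper, and the optional file argument exists only so it can be tried on a saved copy of /proc/mdstat.

```shell
# degraded_arrays [MDSTAT_FILE]
# Prints the name of every md array whose status field, e.g. [U_],
# contains "_", i.e. a member slot that is empty or has failed.
# MDSTAT_FILE defaults to /proc/mdstat.
degraded_arrays() {
    awk '
        /^md[0-9]+ :/ { name = $1 }
        /\[[U_]+\]/ && name != "" {
            if (match($0, /\[[U_]+\]/) &&
                index(substr($0, RSTART, RLENGTH), "_"))
                print name
            name = ""
        }' "${1:-/proc/mdstat}"
}

# Intended use on the tutorial system:
#   degraded_arrays
```

At this point of the guide both md0 and md1 should be listed, because each array has only one of its two members.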