How to Install Apache Spark on Ubuntu 22.04

This tutorial exists for these OS versions

- Ubuntu 22.04 (Jammy Jellyfish)

- Ubuntu 20.04 (Focal Fossa)


Apache Spark is a free, open-source, general-purpose distributed data processing engine that data scientists and engineers use to run fast queries and analytics on large amounts of data. It speeds up processing by keeping working data in the main memory of the cluster nodes instead of repeatedly reading it from disk. It offers high-level APIs in Java, Scala, Python, and R, and supports a rich set of higher-level tools such as Spark SQL, MLlib, GraphX, and Spark Streaming.

This post will show you how to install the Apache Spark data processing engine on Ubuntu 22.04.

Prerequisites

- A server running Ubuntu 22.04.

- A root password is configured on the server.

Install Java

Apache Spark runs on the Java Virtual Machine, so Java must be installed on your server. If it is not installed, you can install it, together with curl, by running the following command:

apt-get install default-jdk curl -y

Once Java is installed, verify the Java installation using the following command:

java -version

You will get the following output:

openjdk version "11.0.15" 2022-04-19
OpenJDK Runtime Environment (build 11.0.15+10-Ubuntu-0ubuntu0.22.04.1)
OpenJDK 64-Bit Server VM (build 11.0.15+10-Ubuntu-0ubuntu0.22.04.1, mixed mode, sharing)
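If you automate this check in a script, note that java -version prints to standard error, not standard output. The sketch below is a hypothetical helper, java_major_version, that reads that output and prints only the major version number. It assumes the usual OpenJDK formats: "1.8.0_312" style for Java 8 and "11.0.15" style for Java 11 and later.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of Spark or the JDK): print the Java major version
# found in `java -version` output read from stdin. Returns 1 if no version is found.
java_major_version() {
  local version
  # The version is the first double-quoted token, e.g. openjdk version "11.0.15" 2022-04-19
  version=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  [ -n "$version" ] || return 1
  case "$version" in
    1.*) echo "$version" | cut -d. -f2 ;;  # legacy scheme: 1.8.0_312 -> 8
    *)   echo "${version%%[.+-]*}" ;;       # modern scheme: 11.0.15 -> 11, 17 -> 17
  esac
}
```

For example, java -version 2>&1 | java_major_version prints 11 for the output shown above.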

Install Apache Spark

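At the time of writing this tutorial, the latest version of Apache Spark is Spark 3.2.1. The dlcdn.apache.org mirror only carries current releases, so if the link below no longer works, download the same file from https://archive.apache.org/dist/spark/spark-3.2.1/ instead. You can download it using the wget command: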

wget https://dlcdn.apache.org/spark/spark-3.2.1/spark-3.2.1-bin-hadoop3.2.tgz
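Before extracting, it is worth checking the archive's integrity. Apache publishes a .sha512 checksum file next to each release archive (the same URL with .sha512 appended). The hypothetical helper below, verify_sha512, compares a file's SHA-512 digest with a hex digest you paste in. It tolerates spaces, line breaks, and upper-case hex because the layout of the checksum file may differ between releases.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify FILE against EXPECTED, a hex SHA-512 digest copied from
# the published .sha512 file. Whitespace and upper-case hex in EXPECTED are ignored.
verify_sha512() {
  local file=$1 expected=$2 actual
  if [ ! -f "$file" ]; then
    echo "missing file: $file" >&2
    return 2
  fi
  # Normalize the pasted digest: drop all whitespace, lower-case the hex letters.
  expected=$(printf '%s' "$expected" | tr -d '[:space:]' | tr 'A-F' 'a-f')
  # sha512sum prints "<digest>  <file>"; keep only the digest.
  actual=$(sha512sum "$file" | cut -d' ' -f1)
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    return 1
  fi
}
```

Usage: verify_sha512 spark-3.2.1-bin-hadoop3.2.tgz "<digest from the .sha512 file>". Only extract the archive if it reports OK.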

Once the download is completed, extract the downloaded file using the following command:

tar xvf spark-3.2.1-bin-hadoop3.2.tgz

Next, move the extracted directory to /opt/spark:

mv spark-3.2.1-bin-hadoop3.2/ /opt/spark

Next, edit the .bashrc file and define the Apache Spark environment variables:

nano ~/.bashrc

Add the following lines at the end of the file:

export SPARK_HOME=/opt/spark
export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin

Save and close the file, then load the new environment variables into your current shell using the following command:

source ~/.bashrc
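If you script this setup instead of editing .bashrc by hand, running the script twice would append the export lines twice. A minimal sketch of an idempotent alternative is the hypothetical append_once helper below, which appends a line only when an identical line is not already in the file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append LINE to FILE unless FILE already contains exactly that line.
append_once() {
  local file=$1 line=$2
  touch "$file"
  # -x: match whole lines, -F: treat LINE as a fixed string, -q: no output
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}
```

Usage: append_once ~/.bashrc 'export SPARK_HOME=/opt/spark' and append_once ~/.bashrc 'export PATH=$PATH:$SPARK_HOME/bin:$SPARK_HOME/sbin'. The single quotes keep $PATH and $SPARK_HOME unexpanded, so they are written to the file literally, just like the lines added with nano.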

Next, create a dedicated user to run Apache Spark:

useradd spark

Next, change the ownership of /opt/spark to the spark user and group:

chown -R spark:spark /opt/spark
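The services below run as the spark user, so every file under /opt/spark should now belong to it. As a sketch, the hypothetical all_owned_by helper below uses find to report the first path not owned by a given user, and fails early if the user or directory does not exist (otherwise find's error would be silently treated as success).

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed only if every file under DIR is owned by USER.
all_owned_by() {
  local dir=$1 user=$2 offender
  [ -d "$dir" ] || { echo "no such directory: $dir" >&2; return 2; }
  id -u "$user" >/dev/null 2>&1 || { echo "no such user: $user" >&2; return 2; }
  # Print the first path NOT owned by USER; -quit stops find after the first hit.
  offender=$(find "$dir" ! -user "$user" -print -quit)
  if [ -n "$offender" ]; then
    echo "not owned by $user: $offender" >&2
    return 1
  fi
}
```

Usage: all_owned_by /opt/spark spark && echo "ownership OK".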

Create a Systemd Service File for Apache Spark

Next, you will need to create systemd service files to manage the Apache Spark master and worker services.

First, create a service file for Spark master using the following command:

nano /etc/systemd/system/spark-master.service

Add the following lines:

[Unit]
Description=Apache Spark Master
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-master.sh
ExecStop=/opt/spark/sbin/stop-master.sh

[Install]
WantedBy=multi-user.target

Save and close the file, then create a service file for the Spark slave (worker):

nano /etc/systemd/system/spark-slave.service

Add the following lines:

[Unit]
Description=Apache Spark Slave
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-slave.sh spark://your-server-ip:7077
ExecStop=/opt/spark/sbin/stop-slave.sh

[Install]
WantedBy=multi-user.target

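Replace your-server-ip with the IP address of your server. In Spark 3.x, start-slave.sh and stop-slave.sh are deprecated in favor of start-worker.sh and stop-worker.sh, which you can use instead. Save and close the file, then reload the systemd daemon to apply the changes: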

systemctl daemon-reload
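Rather than editing spark-slave.service by hand, you could generate it so that the your-server-ip placeholder cannot be left in by mistake. The sketch below is a hypothetical helper, render_worker_unit, that prints the same unit as above with the master URL filled in (port 7077 unless you pass another). Choosing the host with hostname -I is an assumption: that command prints all of the server's addresses, and the first one is not necessarily the address the master listens on, so compare it with the ss output in the next section.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the spark-slave.service unit used in this tutorial,
# pointing the worker at spark://HOST:PORT (PORT defaults to 7077).
render_worker_unit() {
  local host=$1 port=${2:-7077}
  if [ -z "$host" ]; then
    echo "usage: render_worker_unit HOST [PORT]" >&2
    return 1
  fi
  cat <<EOF
[Unit]
Description=Apache Spark Slave
After=network.target

[Service]
Type=forking
User=spark
Group=spark
ExecStart=/opt/spark/sbin/start-slave.sh spark://${host}:${port}
ExecStop=/opt/spark/sbin/stop-slave.sh

[Install]
WantedBy=multi-user.target
EOF
}
```

Usage: render_worker_unit "$(hostname -I | awk '{print $1}')" > /etc/systemd/system/spark-slave.service, followed by another systemctl daemon-reload.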

Next, start and enable the Spark master service using the following command:

systemctl start spark-master

systemctl enable spark-master

You can check the status of the Spark master using the following command:

systemctl status spark-master

You will get the following output:

● spark-master.service - Apache Spark Master
     Loaded: loaded (/etc/systemd/system/spark-master.service; disabled; vendor preset: enabled)
     Active: active (running) since Thu 2022-05-05 11:48:15 UTC; 2s ago
    Process: 19924 ExecStart=/opt/spark/sbin/start-master.sh (code=exited, status=0/SUCCESS)
   Main PID: 19934 (java)
      Tasks: 32 (limit: 4630)
     Memory: 162.8M
        CPU: 6.264s
     CGroup: /system.slice/spark-master.service
             └─19934 /usr/lib/jvm/java-11-openjdk-amd64/bin/java -cp "/opt/spark/conf/:/opt/spark/jars/*" -Xmx1g org.apache.spark.deploy.mast>

May 05 11:48:12 ubuntu2204 systemd[1]: Starting Apache Spark Master...
May 05 11:48:12 ubuntu2204 start-master.sh[19929]: starting org.apache.spark.deploy.master.Master, logging to /opt/spark/logs/spark-spark-org>
May 05 11:48:15 ubuntu2204 systemd[1]: Started Apache Spark Master.

Once you are finished, you can proceed to the next step.

Access Apache Spark

At this point, the Spark master is running. It listens on port 7077 for worker and application connections and serves its web interface on port 8080. You can check it with the following command:

ss -antpl | grep java

You will get the following output:

LISTEN 0 4096 [::ffff:69.28.88.159]:7077 *:* users:(("java",pid=19934,fd=256))
LISTEN 0 1 *:8080 *:* users:(("java",pid=19934,fd=258))
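Because the master takes a few seconds to start, a script that runs right after systemctl start may find these ports not yet open. The hypothetical wait_for_port helper below polls a TCP port once per second using bash's built-in /dev/tcp redirection until it accepts a connection or a timeout (in seconds) expires. It is only a sketch: a filtered (rather than closed) port can make each connection attempt hang for a while.

```bash
#!/usr/bin/env bash
# Hypothetical helper: wait until HOST:PORT accepts TCP connections,
# giving up after TIMEOUT seconds (default 30). Requires bash for /dev/tcp.
wait_for_port() {
  local host=$1 port=$2 timeout=${3:-30} waited=0
  # The subshell opens (and on exit closes) a connection; it fails if the port is closed.
  until (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $host:$port" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

For example, wait_for_port localhost 8080 60 waits up to about a minute for the web interface. In the output above, port 7077 is bound to the server's public address rather than to all interfaces, so check that port with the server's IP address instead of localhost.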

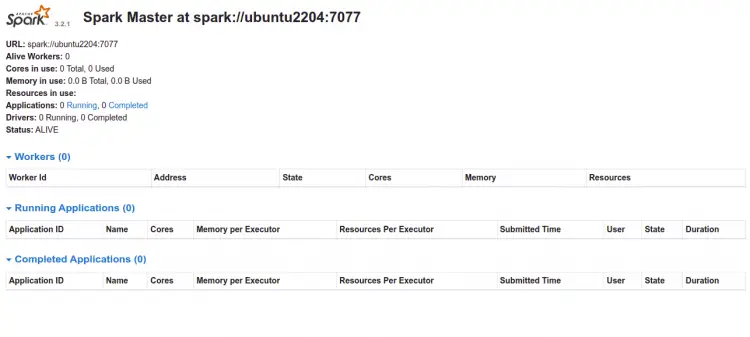

Now, open your web browser and access the Spark web interface using the URL http://your-server-ip:8080. You should see the Apache Spark dashboard on the following page:

Now, start the Spark slave service and enable it to start at system reboot:

systemctl start spark-slave

systemctl enable spark-slave

You can check the status of the Spark slave service using the following command:

systemctl status spark-slave

You will get the following output:

● spark-slave.service - Apache Spark Slave
     Loaded: loaded (/etc/systemd/system/spark-slave.service; disabled; vendor preset: enabled)
     Active: active (running) since Thu 2022-05-05 11:49:32 UTC; 4s ago
    Process: 20006 ExecStart=/opt/spark/sbin/start-slave.sh spark://69.28.88.159:7077 (code=exited, status=0/SUCCESS)
   Main PID: 20017 (java)
      Tasks: 35 (limit: 4630)
     Memory: 185.9M
        CPU: 7.513s
     CGroup: /system.slice/spark-slave.service
             └─20017 /usr/lib/jvm/java-11-openjdk-amd64/bin/java -cp "/opt/spark/conf/:/opt/spark/jars/*" -Xmx1g org.apache.spark.deploy.work>

May 05 11:49:29 ubuntu2204 systemd[1]: Starting Apache Spark Slave...
May 05 11:49:29 ubuntu2204 start-slave.sh[20006]: This script is deprecated, use start-worker.sh
May 05 11:49:29 ubuntu2204 start-slave.sh[20012]: starting org.apache.spark.deploy.worker.Worker, logging to /opt/spark/logs/spark-spark-org.>
May 05 11:49:32 ubuntu2204 systemd[1]: Started Apache Spark Slave.

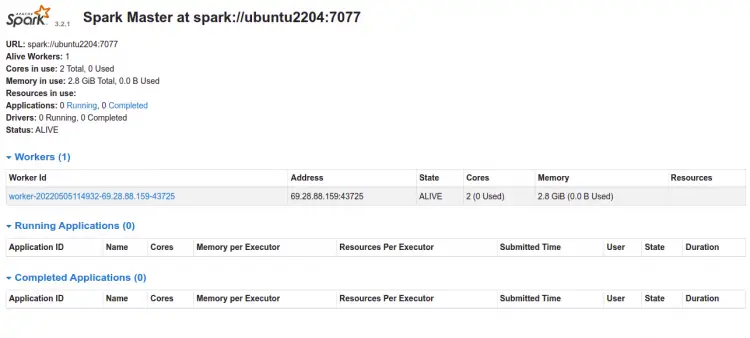

Now, go back to the Spark web interface and refresh the web page. You should see the added Worker on the following page:

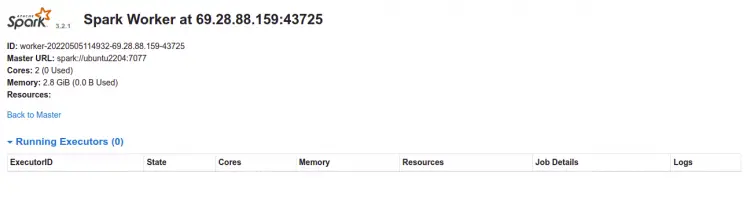

Now, click on the worker. You should see the worker information on the following page:
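You can also check the worker count from the command line with curl, which was installed together with Java. The standalone master's web interface also serves a JSON summary at /json/; the hypothetical helper below assumes that summary contains an aliveworkers field and extracts its value with grep. Verify the field name against your version's output, and prefer a real JSON parser such as jq if it is installed.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read the master's JSON summary from stdin and print the value
# of the (assumed) "aliveworkers" field. Prints nothing if the field is missing.
alive_workers() {
  grep -o '"aliveworkers"[[:space:]]*:[[:space:]]*[0-9][0-9]*' | grep -o '[0-9][0-9]*$'
}
```

Usage: curl -s http://localhost:8080/json/ | alive_workers should print the number of registered workers, which is 1 in this setup once the worker has connected.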

How to Access Spark Shell

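Apache Spark also provides the spark-shell utility, an interactive Scala shell for working with Spark from the command line. It runs in local mode by default; to attach it to the standalone master set up above, add the option --master spark://your-server-ip:7077. To start it in local mode, run the following command: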

spark-shell

You will get the following output:

WARNING: An illegal reflective access operation has occurred
WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/opt/spark/jars/spark-unsafe_2.12-3.2.1.jar) to constructor java.nio.DirectByteBuffer(long,int)
WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform
WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations
WARNING: All illegal access operations will be denied in a future release
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
22/05/05 11:50:46 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Spark context Web UI available at http://ubuntu2204:4040
Spark context available as 'sc' (master = local[*], app id = local-1651751448361).
Spark session available as 'spark'.
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /___/ .__/\_,_/_/ /_/\_\   version 3.2.1
      /_/

Using Scala version 2.12.15 (OpenJDK 64-Bit Server VM, Java 11.0.15)
Type in expressions to have them evaluated.
Type :help for more information.

scala>

To exit from the Spark shell, run the following command:

scala> :quit

If you are a Python developer, use pyspark to access Spark from Python:

pyspark

You will get the following output:

Python 3.10.4 (main, Apr 2 2022, 09:04:19) [GCC 11.2.0] on linux
Type "help", "copyright", "credits" or "license" for more information.
WARNING: An illegal reflective access operation has occurred
WARNING: Illegal reflective access by org.apache.spark.unsafe.Platform (file:/opt/spark/jars/spark-unsafe_2.12-3.2.1.jar) to constructor java.nio.DirectByteBuffer(long,int)
WARNING: Please consider reporting this to the maintainers of org.apache.spark.unsafe.Platform
WARNING: Use --illegal-access=warn to enable warnings of further illegal reflective access operations
WARNING: All illegal access operations will be denied in a future release
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
Setting default log level to "WARN".
To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
22/05/05 11:53:17 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 3.2.1
      /_/

Using Python version 3.10.4 (main, Apr 2 2022 09:04:19)
Spark context Web UI available at http://ubuntu2204:4040
Spark context available as 'sc' (master = local[*], app id = local-1651751598729).
SparkSession available as 'spark'.
>>>

Press CTRL + D to exit the PySpark shell.
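To confirm that the cluster can run a job without an interactive shell, you can submit the SparkPi example that ships with the binary distribution through the bin/run-example script. Spark's README notes that the MASTER environment variable selects the cluster when running the bundled examples. The two hypothetical helpers below run SparkPi and check that its "Pi is roughly ..." line falls in a loose range; the 3.0 to 3.3 bound is an arbitrary sanity check, not a Spark setting.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run the bundled SparkPi example against MASTER (default: local[*]).
# Spark's log messages go to stderr and are discarded; the result line goes to stdout.
run_spark_pi() {
  local master="${1:-local[*]}"
  MASTER="$master" "${SPARK_HOME:-/opt/spark}/bin/run-example" SparkPi 10 2>/dev/null
}

# Hypothetical helper: succeed if the output read from stdin contains a
# "Pi is roughly <x>" line with 3.0 < x < 3.3 (an arbitrary sanity bound).
pi_looks_right() {
  awk '/^Pi is roughly / { found = 1; if ($4 > 3.0 && $4 < 3.3) ok = 1 }
       END { if (found && ok) exit 0; exit 1 }'
}
```

Usage: run_spark_pi spark://your-server-ip:7077 | pi_looks_right && echo "SparkPi OK". Omitting the argument runs the example in local mode instead of on the standalone cluster.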

Conclusion

Congratulations! You have successfully installed Apache Spark on Ubuntu 22.04 and set up a standalone master and worker. You can now start running Spark applications on this standalone cluster. For more information, read the Apache Spark documentation page.