Low Cost SAN - Page 3

8.2 Initiators (AoE)

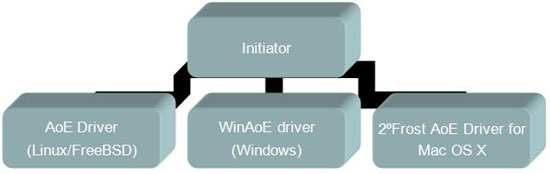

This section describes the available AoE initiators. Client-side AoE drivers are available as FOSS for Linux, Solaris, FreeBSD, and Windows. A driver is also available for Mac OS X, but it is a paid product. The diagram above shows this more clearly.

Brief descriptions of the available AoE initiators are as follows:

| Initiators | Description |
|---|---|
| AoE driver | |
| WinAoE driver | WinAoE is an open source GPLv3 driver for using AoE (ATA over Ethernet) on Microsoft Windows(tm). It can be used for diskless booting of Windows 2000 through Vista 64 from an AoE device (virtual vblade or real Coraid device), or can be used as a general AoE access driver. |
| 2°Frost AoE Driver | 2°Frost AoE Driver provides direct access to shared networked AoE (ATA over Ethernet) storage, transferring raw Ethernet packets using the fast, open AoE protocol rather than with the more complex and slower TCP/IP. |
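To make the "raw Ethernet rather than TCP/IP" point concrete, the sketch below packs an Ethernet header followed by the AoE common header. The field layout (EtherType 0x88A2, version/flags, error, major and minor address, command, tag) follows my reading of the published AoE specification and should be checked against it. The MAC addresses and the helper names `mac_to_bytes` and `build_aoe_header` are hypothetical. Command-specific payloads are left out, so this is an illustration of the framing rather than a working initiator.

```python
import struct

AOE_ETHERTYPE = 0x88A2   # EtherType used by ATA over Ethernet
AOE_VERSION = 1          # protocol version carried in the high nibble
CMD_QUERY_CONFIG = 1     # AoE command 1: Query Config Information


def mac_to_bytes(mac: str) -> bytes:
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes(int(part, 16) for part in mac.split(":"))


def build_aoe_header(dst_mac, src_mac, shelf, slot, command, tag, flags=0):
    """Pack the 14-byte Ethernet header plus the 10-byte AoE common header.

    No IP or TCP header is involved: the AoE header sits directly
    after the EtherType field.
    """
    ver_flags = (AOE_VERSION << 4) | (flags & 0x0F)
    return struct.pack(
        "!6s6sHBBHBBI",
        mac_to_bytes(dst_mac),   # destination MAC
        mac_to_bytes(src_mac),   # source MAC
        AOE_ETHERTYPE,
        ver_flags,
        0,                       # error field, zero in a request
        shelf,                   # major address
        slot,                    # minor address
        command,
        tag,                     # echoed back by the target
    )


# Hypothetical addresses: a broadcast query aimed at shelf 0, slot 1.
header = build_aoe_header(
    "ff:ff:ff:ff:ff:ff", "00:11:22:33:44:55",
    shelf=0, slot=1, command=CMD_QUERY_CONFIG, tag=0x1234,
)
```

The whole header is 24 bytes, which is part of why AoE is cheap to process but also why it cannot be routed beyond the local Ethernet segment.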

8.3 SAN diagram (based on AoE protocol)

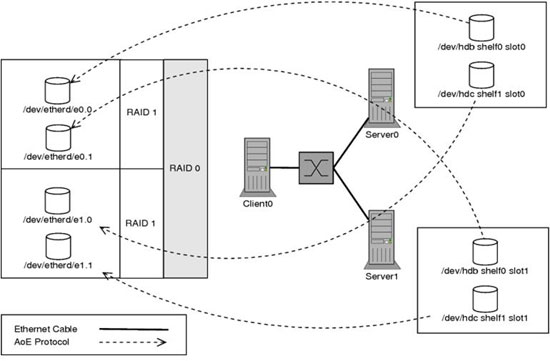

This section describes the basic architecture of our SAN, which is based on the AoE protocol, an AoE target (vblade), and an AoE initiator (aoe driver).

In the diagram above there are two servers, server0 and server1. Each exports two block devices on the network, and the client node accesses these block devices as a RAID device. server0 exports /dev/hdb as /dev/etherd/e0.0 and /dev/hdc as /dev/etherd/e1.0. Similarly, server1 exports /dev/hdb as /dev/etherd/e0.1 and /dev/hdc as /dev/etherd/e1.1. The client side therefore sees four block devices:

• /dev/etherd/e0.0 (from server0)

• /dev/etherd/e0.1 (from server1)

• /dev/etherd/e1.0 (from server0)

• /dev/etherd/e1.1 (from server1)
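The device names in this list follow the Linux aoe driver convention `/dev/etherd/e<shelf>.<slot>`. As a small illustration, the sketch below parses the four names and groups them by slot, which in this particular layout identifies the exporting server. The names `AoEAddress`, `parse_aoe_device`, and `DEVICES` are hypothetical, and the pattern deliberately accepts whole devices only, not partitions.

```python
import re
from typing import NamedTuple


class AoEAddress(NamedTuple):
    shelf: int   # major address
    slot: int    # minor address


_AOE_DEV = re.compile(r"^/dev/etherd/e(\d+)\.(\d+)$")


def parse_aoe_device(path: str) -> AoEAddress:
    """Split '/dev/etherd/e<shelf>.<slot>' into its shelf and slot numbers."""
    match = _AOE_DEV.match(path)
    if match is None:
        raise ValueError(f"not an AoE device path: {path!r}")
    return AoEAddress(int(match.group(1)), int(match.group(2)))


# The four client-side devices and the server each one comes from.
DEVICES = {
    "/dev/etherd/e0.0": "server0",
    "/dev/etherd/e0.1": "server1",
    "/dev/etherd/e1.0": "server0",
    "/dev/etherd/e1.1": "server1",
}

# In this layout the slot number identifies the exporting server.
servers_by_slot = {}
for path, server in DEVICES.items():
    servers_by_slot.setdefault(parse_aoe_device(path).slot, set()).add(server)
```

Here `servers_by_slot` ends up mapping slot 0 to server0 and slot 1 to server1, so devices that share a shelf number always come from different servers.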

We combine /dev/etherd/e0.0 and /dev/etherd/e0.1 into a RAID device (/dev/md0) at RAID level 1 (mirroring). Similarly, /dev/etherd/e1.0 and /dev/etherd/e1.1 are combined into a RAID device (/dev/md1), also at RAID level 1. These two RAID devices are then combined into a single RAID device (/dev/md2) at RAID level 0 (striping). The result is a single RAID device, /dev/md2, built from the four exported block devices, on which we can create a file system for use on the client side. We can also do volume management before creating the RAID device.
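The layout described here can be sketched as a small Python model that produces the command lines one might run and checks which failures /dev/md2 can survive. It only builds argument lists and executes nothing. The interface name `eth0` is an assumption, as are the helper names. The sketch assumes `vblade <shelf> <slot> <interface> <device>` on the servers and `mdadm --create` on the client, after the aoe kernel module has been loaded there.

```python
NETIF = "eth0"  # assumption: the interface each server exports on

# (shelf, slot, local disk) exported by each server, per the text above.
EXPORTS = {
    "server0": [(0, 0, "/dev/hdb"), (1, 0, "/dev/hdc")],
    "server1": [(0, 1, "/dev/hdb"), (1, 1, "/dev/hdc")],
}

# RAID 1 pairs assembled on the client.
MIRRORS = {
    "/dev/md0": ["/dev/etherd/e0.0", "/dev/etherd/e0.1"],
    "/dev/md1": ["/dev/etherd/e1.0", "/dev/etherd/e1.1"],
}


def vblade_commands(server):
    """Target side: one vblade invocation per exported disk."""
    return [["vblade", str(shelf), str(slot), NETIF, disk]
            for shelf, slot, disk in EXPORTS[server]]


def mdadm_create(md, level, members):
    """Client side: argument list for creating one md array."""
    return ["mdadm", "--create", md, f"--level={level}",
            f"--raid-devices={len(members)}", *members]


def client_commands():
    """Two mirrors first, then the stripe across them."""
    cmds = [mdadm_create(md, 1, members) for md, members in MIRRORS.items()]
    cmds.append(mdadm_create("/dev/md2", 0, list(MIRRORS)))
    return cmds


def md2_survives(failed):
    """The stripe needs both mirrors; a mirror needs one live member."""
    return all(any(dev not in failed for dev in members)
               for members in MIRRORS.values())


# Losing all of server0 takes out e0.0 and e1.0 at once.
server0_down = {"/dev/etherd/e0.0", "/dev/etherd/e1.0"}
```

Because each mirror pairs one device from server0 with one from server1, `md2_survives(server0_down)` is true: the array tolerates the loss of an entire server. Losing both members of the same mirror, for example e0.0 and e0.1, takes /dev/md2 down.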

8.4 HA/Failover

This section describes the challenges of a SAN and the open source solutions available for them. The most obvious challenges in storage networking are as follows:

• Storage

• High Availability

• Load Balancing

• High Performance

• Easily Manageable

To meet these goals, we have a reliable tool known as RHCS (Red Hat Cluster Suite). Red Hat Cluster Suite can be used in many configurations to provide high availability, scalability, load balancing, file sharing, and high performance.

RHCS has the following components to address the SAN challenges mentioned above:

| Services | Functionality |
|---|---|
| CMAN | The main component of RHCS. It controls cluster membership and takes care of fencing, resource management, distributed lock management, and failover domains. It has its own GUI and is also controlled through the cluster.conf file. |
| GFS/GFS2 | Global File System (GFS) is a shared disk file system for Linux computer clusters. GFS and GFS2 are cluster-aware file systems that use the Distributed Lock Manager (DLM) for cluster configurations and the "nolock" lock manager for local file systems. |
| Piranha/LVS | RHCS includes LVS (Linux Virtual Server) with the Piranha management/configuration tool, which is used for load balancing. |
| Conga | Conga is a web interface for cluster administration that uses the luci and ricci daemons. |
| DLM/Gulm | Lock management is a common cluster-infrastructure service that provides a mechanism for other cluster infrastructure components to synchronize their access to shared resources. DLM is a distributed lock manager, while GULM is a client-server lock manager. DLM runs on each cluster node; lock management is distributed across all nodes in the cluster. |
| Fencing | Fencing isolates nodes and devices that have failed or become corrupted. CMAN controls the fenced daemon. |

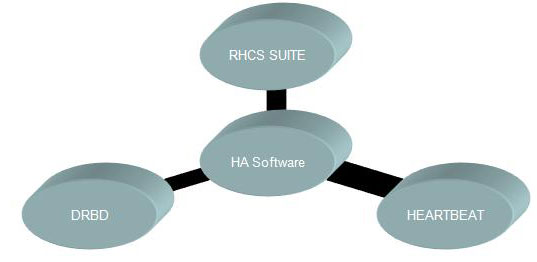

Apart from RHCS, DRBD and Heartbeat are also available as HA solutions for a SAN or any other cluster. Both are FOSS. Brief descriptions of these tools follow:

• DRBD: DRBD (Distributed Replicated Block Device) is a distributed storage system for the Linux platform. It consists of a kernel module, several userspace management applications, and some shell scripts, and is normally used on high availability (HA) clusters. DRBD bears similarities to RAID 1, except that it runs over a network.

• HEARTBEAT: Heartbeat is a daemon that provides cluster infrastructure (communication and membership) services to its clients. This allows clients to know about the presence (or disappearance!) of peer processes on other machines and to easily exchange messages with them. Heartbeat comes with a primitive resource manager (haresources); however it is only capable of managing 2 nodes and does not detect resource-level failures.

DRBD is often deployed together with the Heartbeat cluster manager, although it also integrates with other cluster management frameworks. It integrates with virtualization solutions such as Xen, and may be used both within and on top of the Linux LVM stack.
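The membership service that Heartbeat provides can be illustrated with a toy model: each peer is expected to send periodic heartbeats, and a peer that stays silent for longer than a configured interval is treated as gone. This is a simplified sketch and not Heartbeat's actual implementation. The `deadtime` name is borrowed from the directive of the same name in Heartbeat's ha.cf, while the class and method names are hypothetical. Timestamps are passed in explicitly so the behavior is easy to follow.

```python
class MembershipMonitor:
    """Track the last heartbeat seen from each peer."""

    def __init__(self, peers, deadtime):
        self.deadtime = deadtime                      # seconds of allowed silence
        self.last_seen = {peer: 0.0 for peer in peers}

    def heartbeat(self, peer, now):
        """Record that a heartbeat from `peer` arrived at time `now`."""
        self.last_seen[peer] = now

    def dead_peers(self, now):
        """Peers whose last heartbeat is older than `deadtime` seconds."""
        return sorted(peer for peer, seen in self.last_seen.items()
                      if now - seen > self.deadtime)


monitor = MembershipMonitor(["server0", "server1"], deadtime=10.0)
monitor.heartbeat("server0", now=5.0)
monitor.heartbeat("server1", now=5.0)
monitor.heartbeat("server0", now=12.0)   # server1 has gone quiet
failed = monitor.dead_peers(now=16.0)
```

At time 16 only server1 has been silent for more than 10 seconds, so it is the only peer reported. In a real cluster this is the point at which the resource manager would start failover and, where configured, fencing.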

9 iSCSI in SAN

So far, we have covered the various aspects of a SAN, but with a focus on the AoE protocol. iSCSI has its own features and advantages in a SAN. If we need features such as encryption, routability, and user-based access in the storage protocol, iSCSI seems to be the better choice. ATA disks are also not as reliable as their SCSI counterparts. iSCSI can therefore be used to build a SAN. The following table briefly covers the building blocks of iSCSI:

| Components | Description |
|---|---|
| Disks | SCSI disks |
| HBA | Host Bus Adapter (Aic 7xxx, QLE4xxx, etc.) |
| OS | Linux/Windows (preferably CentOS) |
| Protocol | iSCSI |
| iSCSI Targets | Ardis iSCSI target, Intel iSCSI Target |
| iSCSI Initiators | Ardis iSCSI target & Intel iSCSI initiator (for Linux), Microsoft iSCSI initiator (for Windows) |
| Routers, Switches, Offload Engine | iSCSI Offload Engine (ISOE), Security Offload Engine (SOE), quality routers and a quality switch |
| HA/Failover | RHCS Suite, DRBD, HEARTBEAT |