How to Install Elastic Stack (Elasticsearch, Logstash and Kibana) on CentOS 8

Elasticsearch is an open-source search engine based on Lucene, developed in Java. It provides a distributed, multitenant-capable full-text search engine with an HTTP (REST) interface, and it stores, retrieves, and queries data as schema-free JSON documents. Elasticsearch is a scalable search engine that can be used to search all kinds of text documents, including log files. Elasticsearch is the heart of the 'Elastic Stack', also known as the ELK Stack.

Logstash is an open-source tool for managing events and logs. It provides real-time pipelining for data collection. Logstash collects your log data, converts it into JSON documents, and stores them in Elasticsearch.

Kibana is an open-source data visualization tool for Elasticsearch. Kibana provides a pretty dashboard web interface. It allows you to manage and visualize data from Elasticsearch. It's not just beautiful, but also powerful.

In this tutorial, we will show you, step by step, how to install and configure the 'Elastic Stack' on a CentOS 8 server. We will install and set up Elasticsearch, Logstash, and Kibana, and then set up the Beats shipper 'filebeat' on Ubuntu and CentOS client systems.

Prerequisites

- CentOS 8 64 bit with 4GB of RAM - elk-master

- CentOS 8 64 bit with 1 GB of RAM - client01

- Ubuntu 18.04 64 bit with 1GB of RAM - client02

What we will do:

- Add Elastic Repository to the CentOS 8 Server

- Install and Configure Elasticsearch

- Install and Configure Kibana Dashboard

- Setup Nginx as a Reverse Proxy for Kibana

- Install and Configure Logstash

- Install and Configure Filebeat

- Testing

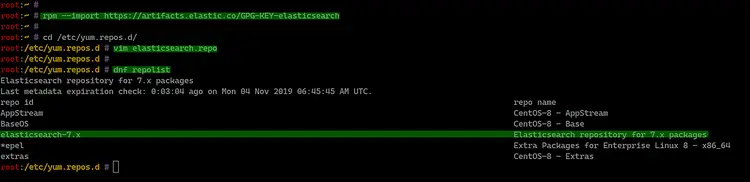

Step 1 - Add Elastic Repository

Firstly, we're going to add the Elasticsearch key and repository to the CentOS 8 server. With the Elasticsearch repository provided by elastic.co, we can install Elastic products, including Elasticsearch, Logstash, Kibana, and Beats.

Add the Elastic GPG key to the system using the following command.

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

After that, go to the '/etc/yum.repos.d' directory and create a new repository file 'elasticsearch.repo'.

cd /etc/yum.repos.d/

vim elasticsearch.repo

Paste the elasticsearch repository below.

[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

Save and close.
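If you prefer not to paste into vim, the repository file can also be written non-interactively. The following is a minimal sketch: the function name 'elastic_repo_config' is hypothetical. It simply prints the same repository definition shown above so it can be piped to 'sudo tee'.

```shell
# Hypothetical helper: print the Elastic 7.x yum repository definition
# used in this step, so it can be written with tee instead of an editor.
elastic_repo_config() {
  # The quoted 'EOF' delimiter keeps the shell from expanding anything inside.
  cat <<'EOF'
[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
}
```

For example:

elastic_repo_config | sudo tee /etc/yum.repos.d/elasticsearch.repo > /dev/null

The same call also works on the CentOS 8 client in Step 6.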

Now list all enabled repositories on the system using the dnf command below.

dnf repolist

You should see the elasticsearch-7.x repository that has been added to the CentOS 8 server.

As a result, you can now install Elastic products such as Elasticsearch, Logstash, and Kibana.

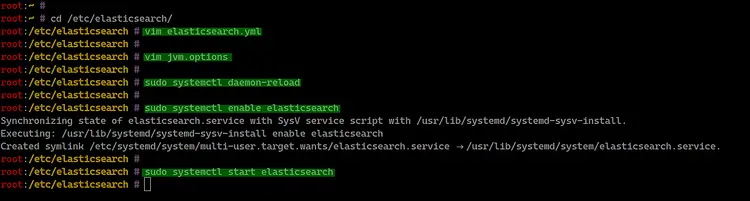

Step 2 - Install and Configure Elasticsearch

In this step, we're going to install and configure Elasticsearch.

Install the Elasticsearch package using the dnf command below.

sudo dnf install elasticsearch -y

Once the installation is complete, go to the '/etc/elasticsearch' directory and edit the configuration file 'elasticsearch.yml' using the vim editor.

cd /etc/elasticsearch/

vim elasticsearch.yml

Uncomment the following lines and change the value for each line as below.

network.host: 127.0.0.1

http.port: 9200

Save and close.
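These two edits can also be scripted instead of made by hand. This is a minimal sketch. It assumes the settings are top-level 'key: value' lines that may be commented out with '#', as in the default file. The 'set_yaml_key' function is hypothetical and is not part of Elasticsearch.

```shell
# Hypothetical helper: set a top-level "key: value" line in a YAML file such
# as /etc/elasticsearch/elasticsearch.yml. The first line holding the key,
# commented out or not, is replaced; if there is none, the line is appended.
set_yaml_key() {
  local file="$1" key="$2" value="$3" tmp status
  tmp=$(mktemp) || return 1
  if awk -v k="$key" -v v="$value" '
      {
        line = $0
        sub(/^#[ \t]*/, "", line)             # look past a leading "#"
        if (!done && index(line, k ":") == 1) {
          print k ": " v                      # replace the first match only
          done = 1
          next
        }
        print
      }
      END { if (!done) print k ": " v }       # key not found: append it
    ' "$file" > "$tmp"; then
    # Overwrite in place so the file keeps its owner and permissions.
    cat "$tmp" > "$file"
    status=$?
  else
    status=1
  fi
  rm -f "$tmp"
  return "$status"
}
```

Writing under /etc/elasticsearch requires root, so run it from a root shell (for example after 'sudo -i'):

set_yaml_key /etc/elasticsearch/elasticsearch.yml network.host 127.0.0.1

set_yaml_key /etc/elasticsearch/elasticsearch.yml http.port 9200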

Optionally, you can tune Elasticsearch by editing the JVM configuration file 'jvm.options' and setting the heap size based on how much memory you have.

Edit the JVM configuration file 'jvm.options' using the vim editor.

vim jvm.options

Set the minimum and maximum heap size via the Xms and Xmx options as below. Elastic recommends setting both to the same value.

-Xms512m

-Xmx512m

Save and close.
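Here is a minimal sketch for setting the heap size from a script. It assumes the file contains uncommented lines starting with '-Xms' and '-Xmx'. If your package version ships them commented out, this hypothetical 'set_jvm_heap' helper changes nothing. It writes the same value to both options, matching the example above.

```shell
# Hypothetical helper: set both -Xms and -Xmx in a jvm.options file to the
# same size (for example 512m), leaving every other line untouched.
set_jvm_heap() {
  local file="$1" size="$2" tmp status
  # Accept sizes such as 512m or 1g only.
  printf '%s\n' "$size" | grep -Eq '^[0-9]+[kKmMgG]?$' || return 1
  tmp=$(mktemp) || return 1
  if awk -v s="$size" '
      /^-Xms/ { print "-Xms" s; next }   # minimum heap
      /^-Xmx/ { print "-Xmx" s; next }   # maximum heap
      { print }
    ' "$file" > "$tmp"; then
    cat "$tmp" > "$file"                 # keep owner and permissions
    status=$?
  else
    status=1
  fi
  rm -f "$tmp"
  return "$status"
}
```

As root, 'set_jvm_heap /etc/elasticsearch/jvm.options 512m' applies the values above. The same call with '/etc/logstash/jvm.options' covers Step 5.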

Next, reload the systemd manager configuration and enable the elasticsearch service to start at boot.

sudo systemctl daemon-reload

sudo systemctl enable elasticsearch

Then start the elasticsearch service.

sudo systemctl start elasticsearch

Elasticsearch is now up and running on the local IP address '127.0.0.1' with the default port '9200' on the CentOS 8 server.

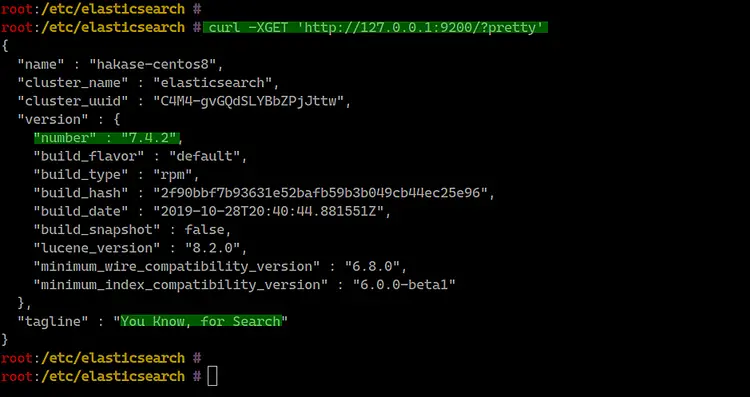

You can check Elasticsearch using the curl command below.

curl -XGET 'http://127.0.0.1:9200/?pretty'

And below is the result that you will get.

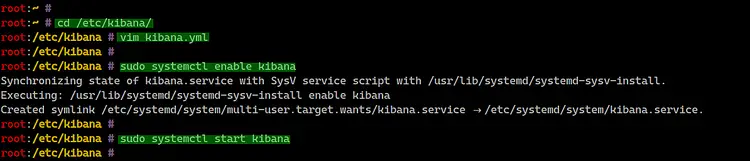
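Elasticsearch can take a little while to start, so a curl request sent immediately after 'systemctl start' may fail with "connection refused". Below is a minimal sketch of a retry loop, assuming curl is installed. The name 'wait_for_http' and its default of 30 attempts two seconds apart are illustrative choices, not Elastic tooling.

```shell
# Hypothetical helper: poll a URL with curl until it answers successfully,
# giving up after a number of attempts.
wait_for_http() {
  local url="$1" tries="${2:-30}" delay="${3:-2}" i=1
  while [ "$i" -le "$tries" ]; do
    # -s hides progress output; -f makes HTTP errors (4xx/5xx) count as failures.
    if curl -sf -o /dev/null "$url"; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

For example, 'wait_for_http http://127.0.0.1:9200/ && curl -XGET "http://127.0.0.1:9200/?pretty"' prints the cluster information once Elasticsearch answers.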

Step 3 - Install and Configure Kibana Dashboard

After installing Elasticsearch, we're going to install and configure the Kibana Dashboard on the CentOS 8 server.

Install the Kibana dashboard using the dnf command below.

sudo dnf install kibana

Once the installation is complete, go to the '/etc/kibana' directory and edit the configuration file 'kibana.yml'.

cd /etc/kibana/

vim kibana.yml

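Uncomment and change the following lines as below. On Kibana 7.x the Elasticsearch address is set with 'elasticsearch.hosts'; the older 'elasticsearch.url' setting was removed.

server.port: 5601

server.host: "127.0.0.1"

elasticsearch.hosts: ["http://127.0.0.1:9200"]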

Save and close.

Next, enable the kibana service to start at boot and start it.

sudo systemctl enable kibana

sudo systemctl start kibana

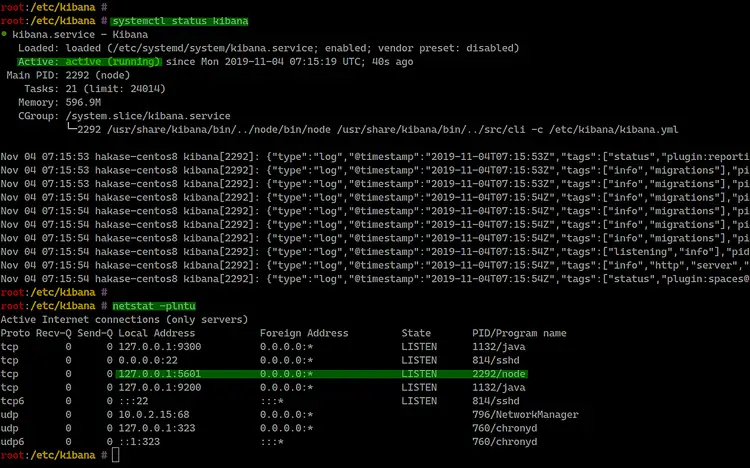

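The Kibana service should now be up and running on the CentOS 8 server. Check it using the following commands. On CentOS 8, netstat comes from the net-tools package, and 'ss -plntu' shows the same information.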

systemctl status kibana

netstat -plntu

The output should show that the kibana service is active and listening on its default TCP port '5601'.
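Rather than reading the netstat output by eye, you can filter it for the expected port. Here is a minimal sketch. The hypothetical 'port_is_listening' function reads 'netstat -plntu' or 'ss -plntu' output on standard input and succeeds if any column ends in ':PORT'.

```shell
# Hypothetical helper: read "netstat -plntu" or "ss -plntu" output on stdin
# and succeed if any column ends in ":PORT" (e.g. 127.0.0.1:5601 or :::5044).
port_is_listening() {
  awk -v p="$1" '
    { for (i = 1; i <= NF; i++) if ($i ~ (":" p "$")) found = 1 }
    END { exit !found }
  '
}
```

For example, 'sudo ss -plntu | port_is_listening 5601 && echo "Kibana is listening"'. After Step 5, the same check with 5044 confirms the Logstash Beats input.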

Step 4 - Setup Nginx as a Reverse Proxy for Kibana

In this step, we're going to install the Nginx web server and set it up as a reverse proxy for the Kibana Dashboard.

Install Nginx and httpd-tools using the dnf command below.

sudo dnf install nginx httpd-tools

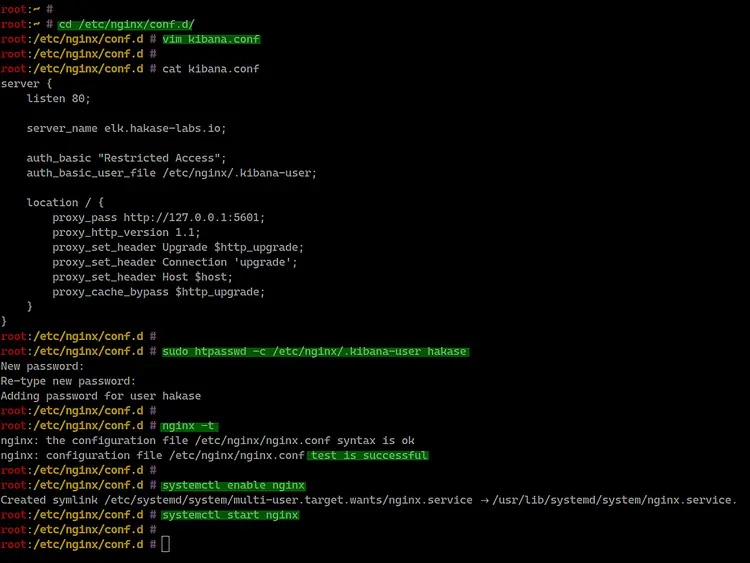

Once the installation is complete, go to the '/etc/nginx/conf.d' directory and create a new configuration file 'kibana.conf'.

cd /etc/nginx/conf.d/

vim kibana.conf

Paste the following configuration.

server {
    listen 80;

    server_name elk.hakase-labs.io;

    auth_basic "Restricted Access";
    auth_basic_user_file /etc/nginx/.kibana-user;

    location / {
        proxy_pass http://127.0.0.1:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host $host;
        proxy_cache_bypass $http_upgrade;
    }
}

Save and close.
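You can generate the Nginx virtual host with your own domain instead of replacing 'elk.hakase-labs.io' by hand. Here is a minimal sketch. 'kibana_nginx_conf' is a hypothetical helper that prints the configuration above with the given server_name. It escapes the Nginx variables ($http_upgrade, $host) so the shell leaves them alone.

```shell
# Hypothetical helper: print the Kibana reverse-proxy server block for the
# domain given as the first argument.
kibana_nginx_conf() {
  local name="$1"
  if [ -z "$name" ]; then
    echo "usage: kibana_nginx_conf SERVER_NAME" >&2
    return 1
  fi
  # Unquoted EOF: ${name} is expanded, while \$ keeps Nginx variables literal.
  cat <<EOF
server {
    listen 80;

    server_name ${name};

    auth_basic "Restricted Access";
    auth_basic_user_file /etc/nginx/.kibana-user;

    location / {
        proxy_pass http://127.0.0.1:5601;
        proxy_http_version 1.1;
        proxy_set_header Upgrade \$http_upgrade;
        proxy_set_header Connection 'upgrade';
        proxy_set_header Host \$host;
        proxy_cache_bypass \$http_upgrade;
    }
}
EOF
}
```

For example, 'kibana_nginx_conf elk.hakase-labs.io | sudo tee /etc/nginx/conf.d/kibana.conf > /dev/null' writes the same file as above.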

Next, we need to create basic authentication to secure access to Kibana. Replace the 'hakase' user with your own username and run the htpasswd command as below.

sudo htpasswd -c /etc/nginx/.kibana-user hakase

TYPE YOUR PASSWORD

Enter and confirm the password when prompted, then test the nginx configuration.

nginx -t

Make sure there is no error.

Now enable the nginx service to start at boot and start it.

systemctl enable nginx

systemctl start nginx

As a result, Nginx is installed and configured as a reverse proxy for the Kibana Dashboard.

Step 5 - Install and Configure Logstash

In this step, we're going to install and configure Logstash, the log shipper. We will install Logstash, set up the Beats input, set up syslog filtering using the Logstash plugin 'grok', and then set up the output to Elasticsearch.

Install logstash using the dnf command below.

sudo dnf install logstash

Once the installation is complete, go to the '/etc/logstash' directory and edit the JVM configuration file 'jvm.options' using the vim editor.

cd /etc/logstash/

vim jvm.options

Change the min/max heap size via the Xms and Xmx configuration as below.

-Xms512m

-Xmx512m

Save and close.

Next, go to the '/etc/logstash/conf.d' directory and create the configuration file for beats input called 'input-beat.conf'.

cd /etc/logstash/conf.d/

vim input-beat.conf

Paste the following configuration.

input {
  beats {
    port => 5044
  }
}

Save and close.

Now create the 'syslog-filter.conf' configuration file.

vim syslog-filter.conf

Paste the following configuration.

filter {
  if [type] == "syslog" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
      add_field => [ "received_at", "%{@timestamp}" ]
      add_field => [ "received_from", "%{host}" ]
    }
    date {
      match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}

Save and close.
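To see which fields the grok filter is meant to pull out of a syslog line, the following sketch approximates the same pattern with a POSIX extended regular expression in sed. It is not grok, and it is not how Logstash runs the filter. 'parse_syslog_line' is a hypothetical name, and the regex only roughly mirrors SYSLOGTIMESTAMP, SYSLOGHOST, DATA, POSINT and GREEDYDATA.

```shell
# Hypothetical helper: a rough sed -E approximation (not grok) of
#   %{SYSLOGTIMESTAMP} %{SYSLOGHOST} %{DATA}(?:\[%{POSINT}\])?: %{GREEDYDATA}
# It reads syslog lines on stdin and prints the extracted fields as key=value.
# Lines that do not fit the pattern print nothing.
parse_syslog_line() {
  sed -E -n 's/^([A-Z][a-z]{2} +[0-9]{1,2} [0-9]{2}:[0-9]{2}:[0-9]{2}) ([^ ]+) ([^:[]+)(\[([0-9]+)])?: (.*)$/syslog_timestamp=\1\
syslog_hostname=\2\
syslog_program=\3\
syslog_pid=\5\
syslog_message=\6/p'
}
```

On a client, 'sudo tail -n 3 /var/log/messages | parse_syslog_line' shows how recent lines would be split.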

And then create the output configuration for elasticsearch 'output-elasticsearch.conf'.

vim output-elasticsearch.conf

Paste the following configuration.

output {
  elasticsearch {
    hosts => ["127.0.0.1:9200"]
    manage_template => false
    index => "%{[@metadata][beat]}-%{+YYYY.MM.dd}"
    document_type => "%{[@metadata][type]}"
  }
}

Save and close.
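The 'index' setting above names one index per Beat per day. The hypothetical 'index_name_for' helper below is a sketch of the name to expect. It assumes the date comes from the event's @timestamp in UTC, which is how Logstash formats '%{+YYYY.MM.dd}'.

```shell
# Hypothetical helper: print the index name the Logstash output above should
# produce for a given Beat and day, e.g. filebeat-2020.01.15.
index_name_for() {
  local beat="${1:-filebeat}" day="$2"
  # Logstash fills %{+YYYY.MM.dd} from the event's @timestamp, which is UTC,
  # so today's index name is computed in UTC as well.
  [ -n "$day" ] || day=$(date -u +%Y.%m.%d)
  printf '%s-%s\n' "$beat" "$day"
}
```

Once Filebeat is shipping logs (Step 6), 'curl -s "http://127.0.0.1:9200/_cat/indices/filebeat-*?v"' should list an index with the name printed by 'index_name_for filebeat'.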

Next, enable the logstash service to start at boot and start it.

systemctl enable logstash

systemctl start logstash

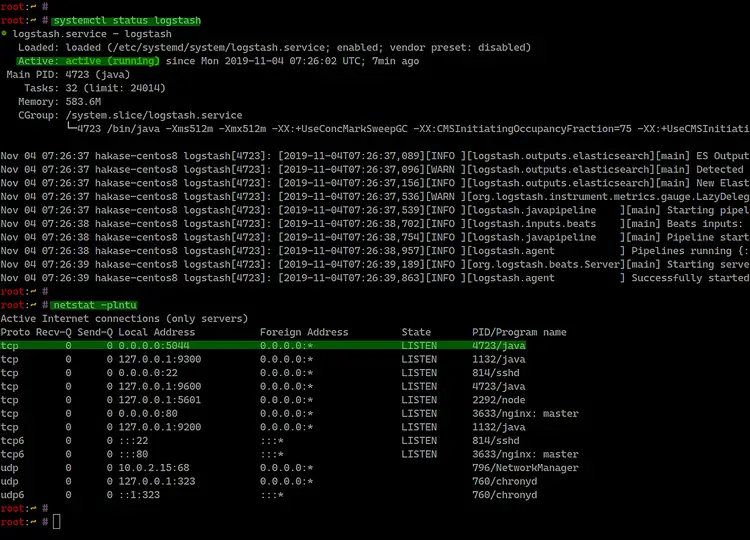

The logstash service should now be up and running. Check it using the following commands.

systemctl status logstash

netstat -plntu

The output should show that the logstash service is active and listening on TCP port '5044', the Beats port set in 'input-beat.conf'. The basic Elastic Stack installation is now complete, and we're ready to ship our logs to the Elastic Stack (ELK Stack) server and monitor them.

Step 6 - Install Filebeat on Client

In this step, we're going to show you how to set up Filebeat on the Ubuntu and CentOS systems. We will install Filebeat and configure it to ship logs from both clients to Logstash on the Elastic Stack server.

- Install Filebeat on CentOS 8

Add the elasticsearch key to the CentOS 8 system using the following command.

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

Now go to the '/etc/yum.repos.d' directory and create the 'elasticsearch.repo' file using the vim editor.

cd /etc/yum.repos.d/

vim elasticsearch.repo

Paste the following configuration.

[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md

Save and close.

Now install the filebeat using the dnf command below.

sudo dnf install filebeat

Wait for the Filebeat installation to finish.

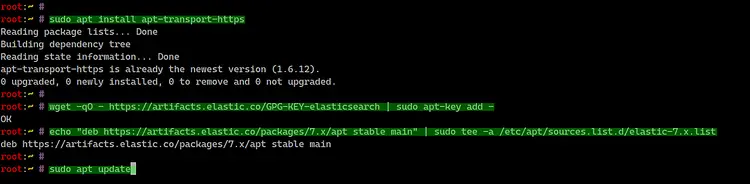

- Install Filebeat on Ubuntu 18.04

Firstly, install the apt-transport-https package.

sudo apt install apt-transport-https

After that, add the elasticsearch key and repository using the following commands.

wget -qO - https://artifacts.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

echo "deb https://artifacts.elastic.co/packages/7.x/apt stable main" | sudo tee -a /etc/apt/sources.list.d/elastic-7.x.list

Now update all repositories and install filebeat on the Ubuntu system using the apt commands below.

sudo apt update

sudo apt install filebeat

Wait for the Filebeat installation to finish.

- Configure Filebeat

The Filebeat configuration is located in the '/etc/filebeat' directory. Go to the filebeat directory and edit the 'filebeat.yml' configuration file.

cd /etc/filebeat/

vim filebeat.yml

Now disable the default Elasticsearch output by commenting it out as below.

#output.elasticsearch:
  # Array of hosts to connect to.
  #hosts: ["127.0.0.1:9200"]

Then enable the Logstash output by uncommenting it, and specify the Logstash host IP address.

output.logstash:
  # The Logstash hosts
  hosts: ["10.5.5.25:5044"]

Save and close.

Next, we need to enable filebeat modules. Run the filebeat command below to get the list of filebeat modules.

filebeat modules list

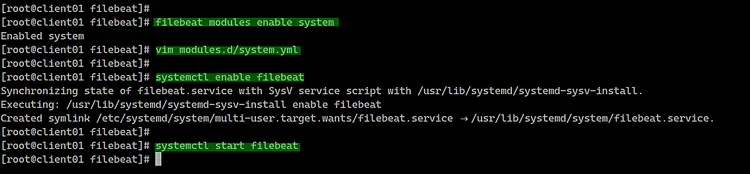

Enable the 'system' module using the following command.

filebeat modules enable system

The filebeat system module has been enabled with the configuration file 'modules.d/system.yml'.

Edit the system module configuration using the vim editor.

cd /etc/filebeat/

vim modules.d/system.yml

Uncomment the 'var.paths' lines for the syslog file and the SSH authorization log file, and set them as below.

For the CentOS system:

- module: system
  # Syslog
  syslog:
    enabled: true
    var.paths: ["/var/log/messages"]

  # Authorization logs
  auth:
    enabled: true
    var.paths: ["/var/log/secure"]

For the Ubuntu system:

- module: system
  # Syslog
  syslog:
    enabled: true
    var.paths: ["/var/log/syslog"]

  # Authorization logs
  auth:
    enabled: true
    var.paths: ["/var/log/auth.log"]

Save and close.
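The only difference between the two clients is the pair of log paths shown above. Here is a minimal sketch of picking them from '/etc/os-release'. It assumes that file's ID field is 'centos' or 'ubuntu', possibly quoted. 'system_log_paths' is a hypothetical helper that prints the paths to put in 'var.paths' and fails on any other distribution.

```shell
# Hypothetical helper: print the syslog and auth log paths for the Filebeat
# system module, based on the ID field of an os-release file.
system_log_paths() {
  local release="${1:-/etc/os-release}" id
  # ID may be quoted (ID="centos") or bare (ID=ubuntu); strip any quotes.
  id=$(sed -n 's/^ID=//p' "$release" | tr -d '"')
  case $id in
    centos) printf 'syslog: /var/log/messages\nauth: /var/log/secure\n' ;;
    ubuntu) printf 'syslog: /var/log/syslog\nauth: /var/log/auth.log\n' ;;
    *) echo "unsupported distribution: ${id:-unknown}" >&2; return 1 ;;
  esac
}
```

Running 'system_log_paths' on each client prints the two paths that belong in 'modules.d/system.yml' on that machine.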

Now enable the filebeat service to start at boot and start it.

systemctl enable filebeat

systemctl start filebeat

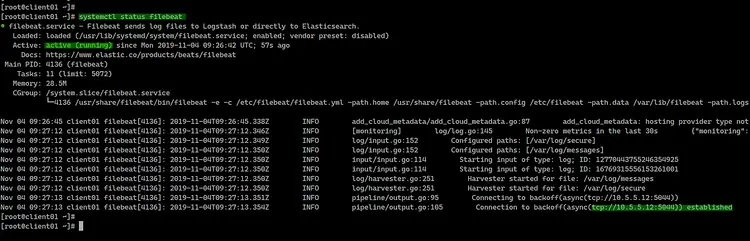

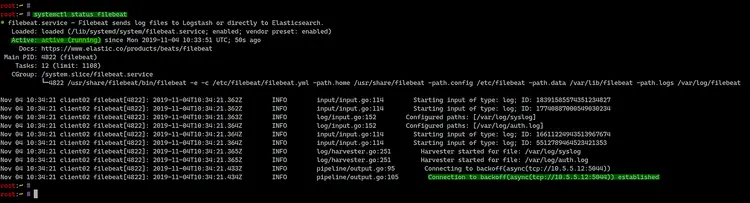

The filebeat service should now be up and running. You can check it using the following command.

systemctl status filebeat

The output should show the filebeat service as active (running) on both the CentOS 8 server and the Ubuntu Server 18.04.

As a result, the connection between Filebeat and the Logstash service on the Elastic Stack server (the address set under 'output.logstash' above) has been established.

Step 7 - Testing

Open your web browser and enter the domain name of your Elastic Stack server (the 'server_name' set in the Nginx configuration) in the address bar.

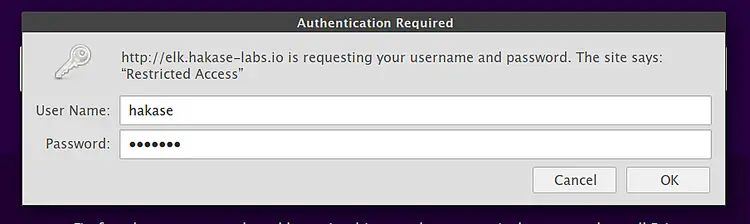

Now log in to the Kibana Dashboard using the basic authentication account that you've created.

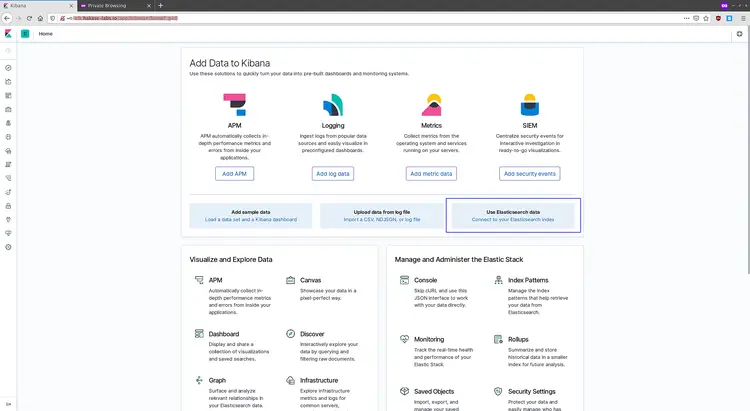

You will then see the Kibana Dashboard.

Now connect to the Elasticsearch index data that was created automatically after Filebeat connected to Logstash. Click on the 'Connect to your Elasticsearch index' link.

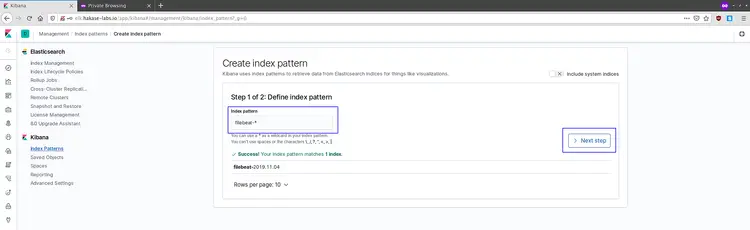

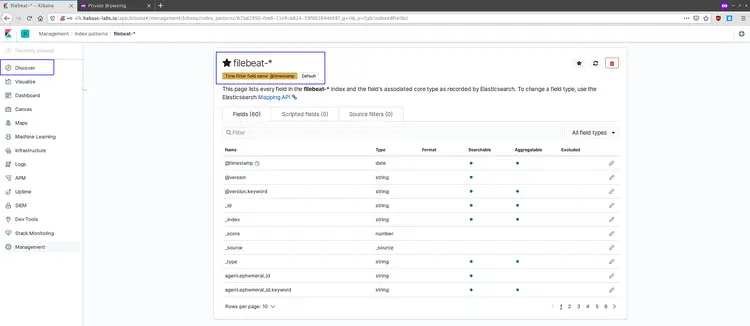

Create the 'filebeat-*' index pattern and click the 'Next step' button.

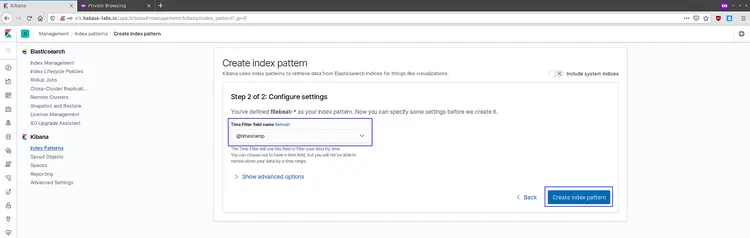

For the time filter field name, choose '@timestamp' and click 'Create index pattern'.

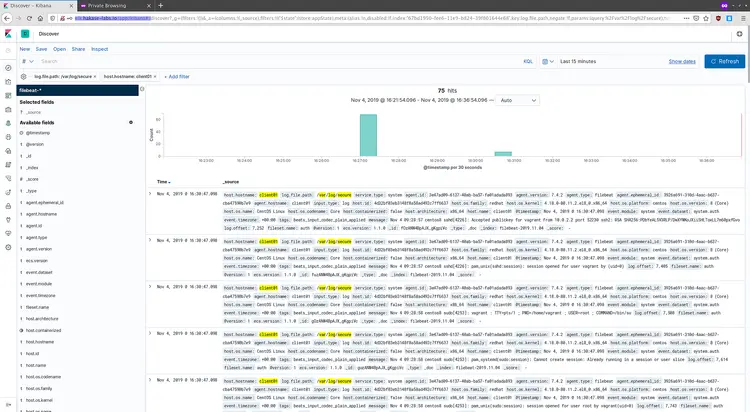

The 'filebeat-*' index pattern has now been created. Click the 'Discover' menu on the left.

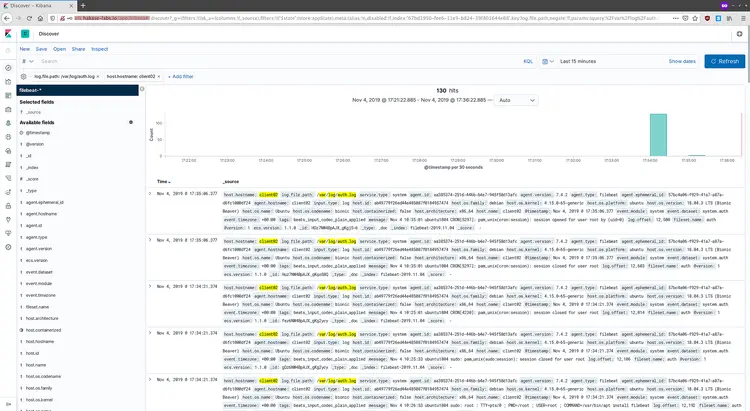

You will now see the log data from both Filebeat clients: logs from the CentOS 8 system and logs from the Ubuntu system.

As a result, the log data defined in the Filebeat system module has been shipped to the Elastic Stack server.

And the Elastic Stack installation and configuration on CentOS 8 has been completed successfully.